Article Highlights:

- With few options available for monitoring live ML models, Anodot’s data science team developed and released a solution called MLWatcher.

- MLWatcher collects and monitors metrics from machine learning models in production.

- This open source Python agent is free to use; simply connect it to your BI service to visualize the results.

- To detect anomalies in these metrics, either set up rule-based alerts or sync it to an ML-based anomaly detection solution, such as Anodot, to do so at scale.

Machine learning (ML) algorithms are designed to automatically build mathematical models from sample data in order to make decisions. Rather than following explicit instructions, they rely on patterns and inference.

And the business applications abound. In recent years, companies such as Google and Facebook have found ways to use ML to turn the massive amounts of data they hold into profit. ML is increasingly used across products and industries, including ad serving, fraud detection, product recommendations, and more.

Tracking and Monitoring: The Missing Step

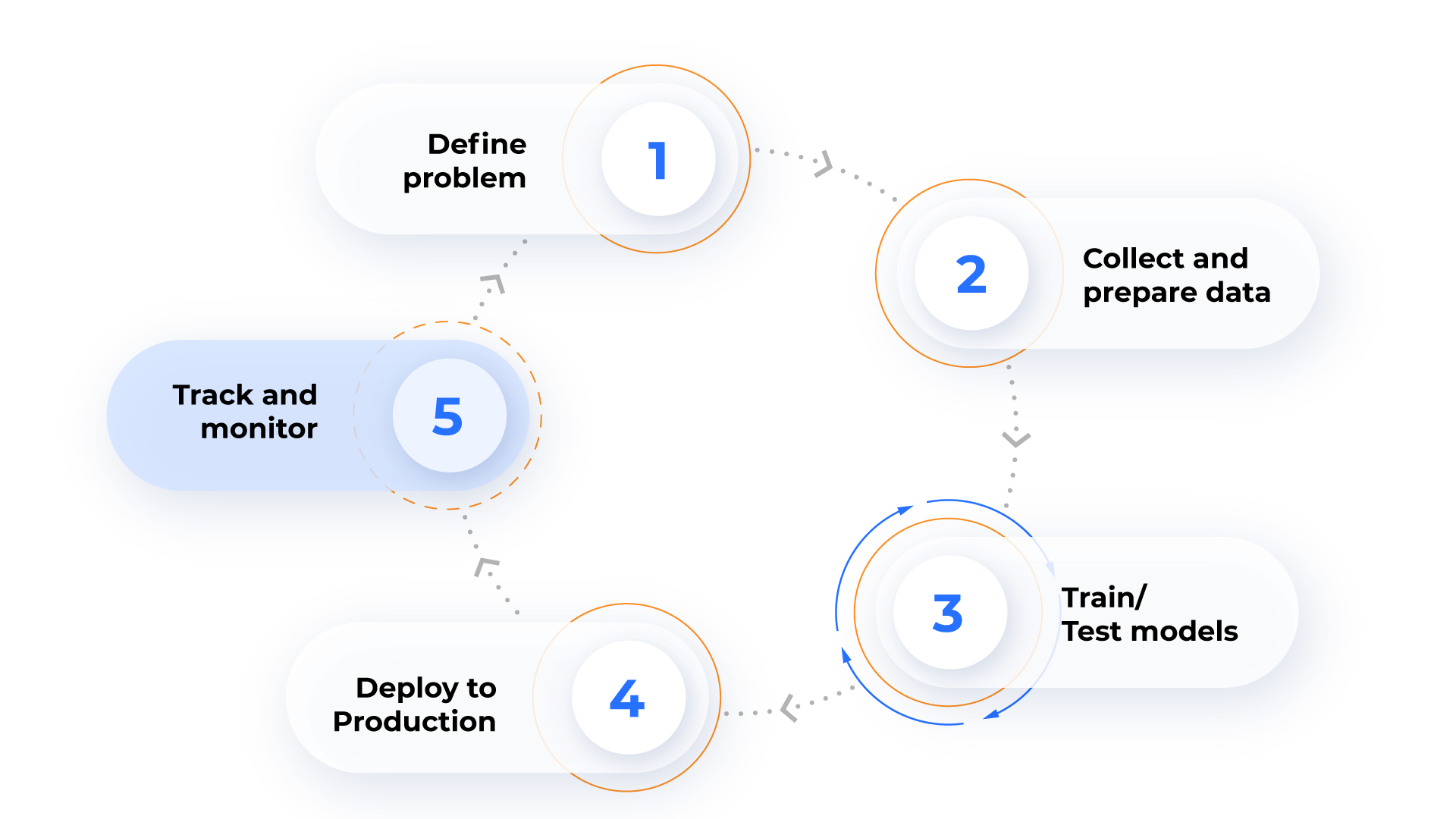

The ML development process consists of defining a particular problem, collecting and preparing relevant data, training and testing models, deploying them to production, and then tracking and monitoring them for accuracy.

The last step in the development process, however, is the one where organizations so often fail. From biased training sets to problems with input features to a lack of contextual understanding (i.e., ‘dumb AI’), there are many ways for production models to go wrong, and catching them requires correct and accurate tracking and monitoring.

A B2C company, for example, may see churn increase because certain input features are missing, causing its churn predictor to generate errors; an ad campaign could needlessly burn through its budget because a bidding algorithm was misconfigured; or an eCommerce site may see a sudden drop in purchases because a new version of its recommendation engine was deployed with a bug.

The impact of these mistakes on an organization can be significant and costly.

Take, for example, Google’s “night of the yellow ad,” when publishers saw mysterious yellow ads appearing across their sites due to a training mistake.

Then there was the “South Park” episode that exposed a vulnerability in Alexa devices across America: a character’s voice on screen was enough to activate viewers’ shopping lists off screen.

Mistakes in the ML process can be challenging to identify and resolve because they rarely throw straightforward errors or exceptions, yet they still affect a project’s end results and, consequently, the insights organizations are trying to derive from it.

Proactively Monitoring and Tracking for Unexpected Changes

Proactive monitoring involves three steps: first, gathering the inputs and outputs of the models in the production environment; second, measuring statistics over time, including the distribution of feature values and prediction classes; and third, proactively tracking those metrics for anomalies.
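The first two steps (gathering inputs and outputs, then measuring statistics over time) can be sketched in plain Python. This is a hypothetical illustration, not MLWatcher’s code: `PredictionLog`, `record`, and `window_stats` are names invented here, requests are bucketed into fixed 60-second windows (an assumption), and each window is summarized as a prediction-class distribution, per-feature means, and per-feature missing-value rates.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class PredictionLog:
    """Hypothetical in-memory log of model inputs and outputs (step 1)."""
    window_seconds: int = 60
    # Maps window start time -> list of (features, predicted_class) records
    windows: dict = field(default_factory=lambda: defaultdict(list))

    def record(self, timestamp: float, features: dict, prediction: str) -> None:
        # Bucket each request into a fixed-size time window
        window_start = int(timestamp // self.window_seconds) * self.window_seconds
        self.windows[window_start].append((features, prediction))

    def window_stats(self, window_start: int) -> dict:
        """Step 2: summarize one window as time-series metric values."""
        records = self.windows.get(window_start, [])
        if not records:
            return {}
        total = len(records)
        # Share of each predicted class in this window
        counts = Counter(pred for _, pred in records)
        stats = {f"prediction_share.{cls}": n / total for cls, n in counts.items()}
        # Collect present values and count missing (None) values per feature
        values = defaultdict(list)
        missing = Counter()
        for features, _ in records:
            for name, value in features.items():
                if value is None:
                    missing[name] += 1
                else:
                    values[name].append(value)
        for name, vals in values.items():
            stats[f"feature_mean.{name}"] = mean(vals)
        for name, n in missing.items():
            stats[f"feature_missing_rate.{name}"] = n / total
        return stats


# Usage: three requests that all fall into the window starting at t=0
log = PredictionLog(window_seconds=60)
log.record(0, {"age": 30, "income": None}, "churn")
log.record(10, {"age": 50, "income": 4000.0}, "stay")
log.record(20, {"age": 40, "income": 6000.0}, "stay")
stats = log.window_stats(0)
```

Tracking a missing-value rate alongside the feature means is one way to surface the “missing input features” failure described earlier, since a silent drop in feature coverage shows up as a jump in that metric rather than as an exception.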

The Solution: Closing the Visibility Gap in Production

MLWatcher is a Python agent that was designed by Anodot to close a critical visibility gap in the ML development process; specifically, it helps the data science community monitor running ML algorithms in real time.
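To make the idea of an agent that watches a running model concrete, here is a minimal sketch of capturing every prediction request as it happens. It does not reflect MLWatcher’s actual integration API; the `monitored` decorator, the `records` sink, and the toy `predict_churn` model are all hypothetical.

```python
import functools
import time


def monitored(sink):
    """Hypothetical decorator: record every call's inputs and output in `sink`."""
    def decorator(predict_fn):
        @functools.wraps(predict_fn)
        def wrapper(features):
            prediction = predict_fn(features)
            # Store a copy so later mutation of the caller's dict does not alter the log
            sink.append({
                "ts": time.time(),
                "features": dict(features),
                "prediction": prediction,
            })
            return prediction
        return wrapper
    return decorator


records = []


@monitored(records)
def predict_churn(features):
    # Toy stand-in for a real classifier
    return "churn" if features["days_inactive"] > 30 else "stay"


predict_churn({"days_inactive": 45})
predict_churn({"days_inactive": 3})
```

Appending to a list keeps the sketch simple; a production agent would more likely hand records to a queue drained by a background thread, so that monitoring adds as little latency as possible to each prediction.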

MLWatcher computes time series metrics describing the performance of your running ML classification algorithm, and enables you to monitor the following in real time:

- Distribution of the classifier’s output predictions

- Distribution of the input features

- Accuracy measures of the classifier: the accuracy, precision, recall, and F1 score of your predictions versus the true labels, when those labels are known.
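The accuracy measures listed above can be computed per time window once true labels arrive. The function below is a stdlib-only sketch for a single positive class; `classification_metrics` and the example labels are hypothetical. scikit-learn’s `precision_recall_fscore_support` provides the same per-class quantities if that dependency is acceptable.

```python
from collections import Counter


def classification_metrics(predictions, labels, positive):
    """Accuracy, precision, recall and F1 for one positive class.

    `predictions` and `labels` are equal-length sequences; only windows
    whose true labels are already known can be scored this way.
    """
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    # Count (predicted positive?, actually positive?) pairs
    pairs = Counter(
        (pred == positive, label == positive)
        for pred, label in zip(predictions, labels)
    )
    tp = pairs[(True, True)]
    fp = pairs[(True, False)]
    fn = pairs[(False, True)]
    correct = sum(p == l for p, l in zip(predictions, labels))
    accuracy = correct / len(labels) if labels else 0.0
    # Guard each ratio against an empty denominator
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


preds = ["churn", "stay", "churn", "stay", "churn"]
truth = ["churn", "stay", "stay", "churn", "churn"]
metrics = classification_metrics(preds, truth, positive="churn")
```

Here two of the three “churn” predictions are correct and two of the three actual churners are caught, so precision, recall, and F1 are all 2/3, while accuracy is 3/5 = 0.6.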

Once computed, the metrics can be sent to any monitoring system for visual inspection or other types of evaluation. One of the built-in outputs is the Anodot anomaly detection service.
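As one example of a generic monitoring target (chosen here for illustration, not one of MLWatcher’s documented outputs), the sketch below formats a window’s metrics in the Graphite plaintext protocol, where each line is `<metric path> <value> <unix timestamp>`. The `to_graphite_lines` helper and the `models.churn_predictor` prefix are hypothetical; actually sending the payload (for example over TCP to a Carbon listener) is left out.

```python
import time


def to_graphite_lines(metrics, prefix, timestamp=None):
    """Format a dict of metric values as Graphite plaintext protocol lines.

    Each line has the form "<metric path> <value> <unix timestamp>".
    """
    ts = int(time.time() if timestamp is None else timestamp)
    # Sort names so the payload is deterministic across runs
    lines = [f"{prefix}.{name} {value} {ts}" for name, value in sorted(metrics.items())]
    return "\n".join(lines) + "\n"


payload = to_graphite_lines(
    {"prediction_share.churn": 0.25, "f1": 0.8},
    prefix="models.churn_predictor",
    timestamp=1700000000,
)
```

Because every metric carries its own dotted path and timestamp, any backend that ingests time series can chart it or apply alerting to it without knowing anything about the model that produced it.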

Automatically Detecting Issues Within ML Models: Anodot Provides the Missing Link

Unexpected changes in ML model performance can be tracked manually, with dashboards and rule-based alerting, but that approach hardly scales. To monitor multiple production models efficiently, we recommend using another set of ML algorithms to do the work for you: automated anomaly detection.
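The difference between the two approaches can be sketched on a single metric stream. `rule_alert` is a static, hand-tuned threshold; `RollingZScoreDetector` learns a baseline from recent history and flags points that deviate strongly from it. Both are hypothetical illustrations, and the rolling z-score is a common statistical baseline, not Anodot’s anomaly detection algorithm.

```python
from collections import deque
from statistics import mean, stdev


def rule_alert(value, low, high):
    """Static rule: alert when a metric leaves a fixed, hand-tuned range."""
    return not (low <= value <= high)


class RollingZScoreDetector:
    """Flag a point whose z-score against the last `window` points exceeds `threshold`."""

    def __init__(self, window=20, threshold=3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        is_anomaly = False
        past = list(self.history)
        # At least two past points are needed to estimate a standard deviation
        if len(past) >= 2:
            mu = mean(past)
            sigma = stdev(past)
            if sigma == 0:
                # A perfectly flat history: any change at all is unusual
                is_anomaly = value != mu
            else:
                is_anomaly = abs(value - mu) / sigma > self.threshold
        self.history.append(value)
        return is_anomaly


# A prediction-share metric that is stable around 0.5, then suddenly drops
detector = RollingZScoreDetector(window=10, threshold=3.0)
series = [0.50, 0.52, 0.49, 0.51, 0.50, 0.48, 0.51, 0.50, 0.20]
flags = [detector.update(v) for v in series]
```

The rule needs someone to pick `low` and `high` for every metric of every model, whereas the detector adapts its baseline on its own; that difference is what makes learned baselines more practical once many models and metrics are involved.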

Anodot’s MLWatcher is generic and easy to integrate. This open source software is available on GitHub, and Anodot users can easily sync it with our platform to detect and alert on anomalies in their ML models.