Unexpected plot twist: understanding what data outliers are and how detecting them can eliminate business latency

Business metric data is only as useful as the insights that can be extracted from it, and that extraction is ultimately limited by the tools employed. One of the most basic and commonly used data analysis and visualization tools is the time series: a two-dimensional plot of some metric’s value at many sequential moments in time. Each data point in that time series is a record of one facet of your business at that particular instant. When plotted over time, that data can reveal trends and patterns that indicate the current state of that particular metric.

The basics: understanding what data outliers are

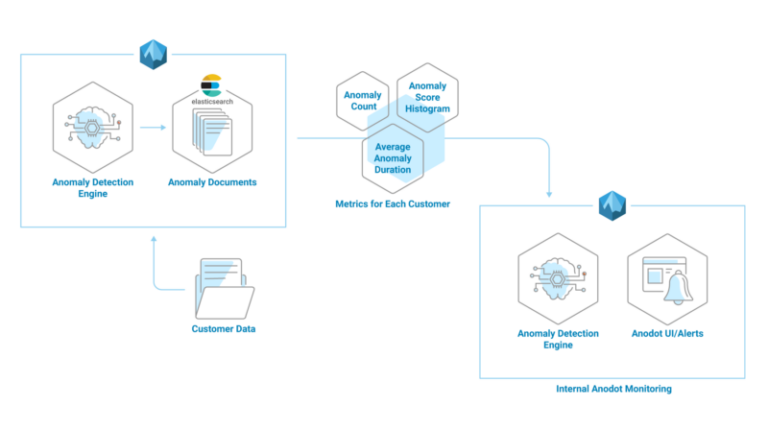

A time series plot shows what is happening in your business. Sometimes, that diverges from what you expect to happen. When that divergence falls outside the usual bounds of variance, it’s an outlier. In Anodot’s outlier detection system, the expectations that set those bounds are derived from a continuous examination of all the data points for that metric.
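To make "outside the usual bounds of variance" concrete, here is a minimal sketch that flags a point when it lies more than `k` standard deviations from the mean of the points just before it. This is not Anodot’s algorithm; the function name `flag_outliers`, the window size, and the threshold `k` are assumptions chosen purely for illustration.

```python
from statistics import mean, stdev


def flag_outliers(values, window=10, k=3.0):
    """Return indices of points that fall outside mean +/- k * stdev
    of the `window` points that precede them (illustrative only)."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is a divergence.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) > k * sigma:
            flagged.append(i)
    return flagged


# Hypothetical metric: steady around 100 with one spike.
series = [100, 102, 98, 101, 99, 100, 103, 97, 101, 100, 99, 180, 101]
print(flag_outliers(series))
```

Note that once the spike enters the history window, it inflates the bounds for the points that follow, which is one reason fixed-window rules like this are only a starting point.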

In many situations, data outliers are errant data which can skew averages, and thus are usually filtered out and excluded by statisticians and data analysts before they attempt to extract insights from the data. The rationale is that those outliers are due to reporting errors or some other cause they needn’t worry about. Also, since genuine outliers are relatively rare, they aren’t seen as indicating a deeper, urgent problem with the system being monitored.

Outliers, however, can be significant data points in and of themselves when reporting errors or other sources of error aren’t suspected. An outlier in one of your metrics could reflect a one-off event or a new opportunity, like an unexpected increase in sales for a key demographic you’ve been trying to break into.

Outliers in time series data mean something has changed. Significant changes can first manifest as outliers when only a few events serve as an early harbinger of a much more widespread issue. A large ecommerce company, for example, may see a larger than usual number of payment processing failures from a specific but rarely used financial institution. The failures occur because that institution updated its API to incorporate new regulatory standards for online financial transactions. This particular bank is merely the first to comply with the new industry-wide standard. If these failures get written off as inconsequential outliers and are not recognized as the canary in the coal mine, the entire company may soon be unable to accept any payments, as every bank eventually adopts the new standard.

Outliers to the rescue

At Anodot, we learned firsthand that an entire metric for a particular object may be an outlier, compared to that identical metric from other similar objects. This is a prime example of how outlier detection can be a powerful tool for optimization: by spotting a single underperforming component, the performance of the whole system can be dramatically improved.

For us, it was degraded performance on a single Cassandra node. For your business, it could be a CDN introducing unusually high latency, causing web page load times to rise until they become unbearable and your visitors click away and fall into someone else’s funnel.

Anodot’s outlier detection compares objects that are supposed to behave similarly and identifies the ones that are behaving differently. That can be a single data point which is unexpectedly different from the previous ones, or a metric from one particular object which deviates from that same metric on other, identical objects.
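The peer-comparison case can be sketched with a well-known robust statistic, the modified z-score based on the median and the median absolute deviation (MAD). This is a swapped-in textbook technique, not a description of Anodot’s implementation; the function name `deviating_peers`, the node names, and the latency figures are hypothetical, and the 3.5 cutoff is the conventional rule of thumb for this score.

```python
from statistics import median


def deviating_peers(metric_by_peer, threshold=3.5):
    """Return the peers whose metric deviates from the group,
    using the modified z-score: 0.6745 * |x - median| / MAD."""
    values = list(metric_by_peer.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # At least half the peers share the median value; anything else stands out.
        return [name for name, v in metric_by_peer.items() if v != med]
    return [
        name
        for name, v in metric_by_peer.items()
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical read latency (ms) for five nodes that should behave alike.
latency_ms = {
    "node-1": 12.0,
    "node-2": 11.5,
    "node-3": 12.4,
    "node-4": 48.0,
    "node-5": 11.9,
}
print(deviating_peers(latency_ms))
```

The median and MAD are used here instead of the mean and standard deviation because a single badly behaving peer would otherwise drag the baseline toward itself and partly hide its own deviation.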

Context requires intelligent data monitoring

Anomalous data points are classified in the context of all the data points which came before. The significance of detected anomalies is then quantified in the context of their magnitude and persistence. Finally, concise reporting of those detected significant anomalies is informed by the context of other anomalies in related metrics. Context requires understanding… understanding gleaned from learning. Machine learning, that is. Although several tests for outliers don’t involve machine learning, they almost always assume a Gaussian (normal) distribution, the iconic bell curve, which real data often doesn’t follow.
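One way to picture "magnitude and persistence" is to merge consecutive anomalous points into a single anomaly and then rank anomalies by how far and how long they deviate. The sketch below assumes the input is a list of deviations already expressed in units of expected spread; the `Anomaly` class, the `group_anomalies` and `significance` functions, and the peak-times-length score are all hypothetical choices for illustration, not Anodot’s scoring method.

```python
from dataclasses import dataclass


@dataclass
class Anomaly:
    start: int    # index of the first anomalous point
    length: int   # persistence: number of consecutive anomalous points
    peak: float   # magnitude: largest absolute deviation in the run


def group_anomalies(deviations, threshold=3.0):
    """Merge consecutive points whose |deviation| exceeds `threshold`."""
    anomalies = []
    current = None
    for i, d in enumerate(deviations):
        if abs(d) > threshold:
            if current is None:
                current = Anomaly(start=i, length=1, peak=abs(d))
            else:
                current.length += 1
                current.peak = max(current.peak, abs(d))
        elif current is not None:
            anomalies.append(current)
            current = None
    if current is not None:
        anomalies.append(current)
    return anomalies


def significance(anomaly):
    """Hypothetical score: bigger and longer-lasting anomalies rank higher."""
    return anomaly.peak * anomaly.length


deviations = [0.2, 4.0, 0.1, 3.5, 5.0, 4.5, 0.3]
ranked = sorted(group_anomalies(deviations), key=significance, reverse=True)
for a in ranked:
    print(a, significance(a))
```

With a score like this, a brief blip and a sustained shift of similar height are no longer treated as equally urgent.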

But there’s another kind of latency which outlier detection can remove: business latency. One example of business latency is the lag between a problem’s occurrence and its discovery. Another is the time delay between the discovery of the problem and when an organization possesses the actionable insights to quickly fix it. Anodot’s outlier detection system can remove both: the former by accurate real-time anomaly detection, the latter by concise reporting of related anomalies. Solving the problem of business latency is a priority for all companies in the era of big data, and it’s a much harder problem to solve with traditional business intelligence (BI) tools.

Traditional BI: high latency, fewer results

Traditional BI is not designed for real-time big data, but rather for analyzing historical data. In addition, it simply visualizes the given data rather than surfacing issues that need to be considered. Analysts therefore cannot rely on BI solutions to find problems for them, because they must already know what they are looking for. Using traditional BI, analysts may identify issues late, if at all, which leads to loss of revenue, quality, and efficiency. What’s needed is speed, the one essential component for successful BI alerts and investigations.

And that speed can make your business an outlier, way above your competition.
