Ad Campaigns: How to Reduce False Positive Alerts for Ad Budget Caps

The massive scale and speed of online advertising mean that adtech companies need to collect, analyze, and act on immense datasets in real time, 24 hours a day. The insights drawn from this flood of data can become a competitive advantage for those prepared to act on them quickly.

Traditional monitoring tools such as business intelligence systems and executive dashboards don’t scale to the large number of metrics adtech generates, creating blind spots where problems can lurk. Moreover, these tools lead to latency in detecting issues because they act on historical data rather than real-time information.

Anodot’s AI-powered business monitoring platform addresses the challenges and scale of the adtech industry. By monitoring the most granular digital ad metrics in real time and identifying the correlations between them, Anodot enables marketing and PPC teams to optimize their campaigns for conversion, targeting, creative, bidding, placement, and scale.

False positive alerts steal time and money from your organization

In any business monitoring solution, alerts for false positive anomalies or incidents are troubling in several ways. First, they divert attention from investigating and following up on the true positive anomalies that were detected. No one can tell a false positive from a true positive until at least some investigative work has been done to determine the real situation. With false positives, that time (and money) is wasted while true positives sit on the back burner, waiting for the resources to investigate them. In the adtech industry, time lost is money lost.

Too many false positive notifications create alert fatigue and erode confidence in the monitoring solution. Analysts may begin to doubt the findings and ignore the alerts, so real problems go undetected and unmitigated.

When a monitoring solution issues excessive false positive alerts, its detection logic needs to be tuned to reduce the noise and improve accuracy. This is precisely what happened in a recent case with an Anodot adtech client, and the resulting fix can help anyone in adtech and marketing roles.

Capped campaigns create false positives in business monitoring

In this scenario, an adtech company’s account managers are responsible for helping their customers manage campaign budgets and allocate resources in order to attain optimal results. Working closely with Anodot, this company has set alerts for approximately 7,000 metrics to monitor for changes in patterns and to detect any technical issues that might result in unexpected drops in their impressions, conversions, and other critical KPIs. It’s all very standard for any adtech company.

So what’s the issue? Capped campaigns create false positives.

Many of this company’s customers have a predetermined budget for each campaign, which pays for ads, clicks, impressions, conversions, and so on. When the budget is exhausted, or nearly so, an internal system notifies the account manager. At the same time, the relevant KPIs being monitored drop sharply, which makes sense given that no more money is being spent on ads. This drop usually does not follow any detectable pattern.

While the account manager expects the drop in KPIs, the business monitoring system does not, so the drop appears to be an anomaly. The system often sends a corresponding alert, which in this case is a false positive because the account manager expected the drop.
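To see why a capping drop reads as an anomaly, consider a deliberately simple detector that flags any value more than `k` standard deviations below the recent mean. This is not Anodot's detection model, which the article does not describe. The impression counts and the function name `is_anomalous_drop` are hypothetical. The point is only that a detector looking at the KPI alone cannot tell a budget cap from a real failure.

```python
from statistics import mean, stdev


def is_anomalous_drop(history, latest, k=3.0):
    """Flag `latest` if it falls more than k standard deviations below the mean of `history`."""
    mu = mean(history)
    sigma = stdev(history)
    return latest < mu - k * sigma


# Hypothetical hourly impressions for a campaign that is still spending its budget.
impressions = [1020, 980, 1005, 995, 1010, 990, 1000, 1015]

# The budget runs out and impressions collapse, exactly as the account manager expects.
after_cap = 40

# The detector only sees the KPI, so it flags the expected drop anyway.
print(is_anomalous_drop(impressions, after_cap))  # True
```

Any detector that sees only the KPI series is in the same position, however sophisticated its baseline is. The missing ingredient is the information that the campaign was capped.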

Capped campaigns are not unusual in this industry, so the monitoring system needs to be tuned to accommodate these occurrences to reduce the number of false positive alerts.

Anodot’s unique approach eliminates false positives in capped campaigns

Anodot’s first attempt to resolve the issue was to add capped events as influencing events. This did not fix the issue, because an influencing event was associated only with an alert, not with a specific metric. False positive alerts continued, often reaching many people and adding redundant “noise.”

The issue was resolved when Anodot suggested reporting the capped event as an influencing metric in its own right, so that it can be correlated at the account dimension level or by campaign ID. The adtech company now sends Anodot a metric via the API, with a value of “1” for a capped event and “0” for an uncapped one. The API call is triggered on each significant KPI change. A watermark is then sent to close the current data bucket, so that the metric’s new value is registered as quickly as possible.
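On the client side, this step might look like the sketch below. It builds a 0/1 capped-event sample tagged with account and campaign dimensions, plus a watermark that closes the bucket containing the sample. The field names, the metric name `campaign_capped`, the 5-minute bucket size, and the watermark message shape are all hypothetical. They are not Anodot's actual API schema, so check Anodot's API documentation for the real endpoints and payloads. The sketch only builds the JSON bodies and does not send anything.

```python
import json
import time
from dataclasses import asdict, dataclass


@dataclass
class CappedEventSample:
    # Hypothetical field names; Anodot's real metrics schema may differ.
    name: str
    dimensions: dict
    timestamp: int
    value: int  # 1 = capped, 0 = uncapped


def build_capped_sample(account_id, campaign_id, capped, ts):
    """Encode the campaign's capping status as a 0/1 metric with its dimensions."""
    return CappedEventSample(
        name="campaign_capped",  # hypothetical metric name
        dimensions={"account_id": account_id, "campaign_id": campaign_id},
        timestamp=ts,
        value=1 if capped else 0,
    )


def build_watermark(ts, bucket_seconds=300):
    """Hypothetical watermark: declare the bucket containing `ts` complete, up to its end time."""
    bucket_end = (ts // bucket_seconds + 1) * bucket_seconds
    return {"watermark": bucket_end}


def on_kpi_change(account_id, campaign_id, capped, ts=None):
    """Return the (metrics, watermark) JSON bodies a client might POST on a KPI change."""
    ts = int(time.time()) if ts is None else ts
    sample = build_capped_sample(account_id, campaign_id, capped, ts)
    return json.dumps([asdict(sample)]), json.dumps(build_watermark(ts))


metrics_body, watermark_body = on_kpi_change("acct-42", "cmp-7", capped=True, ts=1700000100)
print(watermark_body)  # {"watermark": 1700000400}
```

Sending the watermark right after the sample is what keeps the capped value from waiting for the bucket to close on its own timeout. That delay matters, because the suppression check relies on the capped value being available.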

When a KPI drop occurs, Anodot checks the corresponding capped-event metric at the account level. If that metric contains a “1,” no alert is triggered, because the system now knows the campaign is capped. Anodot searches back up to 10 data points in the influencing metric for the most recently reported value. As a result, Anodot can still match the capped event even if it arrives before the drop is reported.
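The suppression rule can be sketched as follows. The 10-point lookback comes from the description above. Representing the influencing metric as a per-bucket list with `None` where no value was reported is an assumption, and the function names are hypothetical. This illustrates the logic only and is not Anodot's implementation.

```python
from typing import Optional, Sequence

LOOKBACK = 10  # look back over at most the last 10 data points, per the description above


def last_reported(capped_series: Sequence[Optional[int]], lookback: int = LOOKBACK) -> Optional[int]:
    """Return the most recent non-missing value among the last `lookback` data points."""
    for value in reversed(capped_series[-lookback:]):
        if value is not None:
            return value
    return None


def should_alert(kpi_drop_detected: bool, capped_series: Sequence[Optional[int]]) -> bool:
    """Send a KPI-drop alert unless the influencing capped metric last reported 1."""
    if not kpi_drop_detected:
        return False
    return last_reported(capped_series) != 1


# The capped event arrived two buckets before the drop was reported: alert suppressed.
print(should_alert(True, [0, 0, 1, None, None]))  # False
# No capped event in the window: the drop is treated as a real anomaly.
print(should_alert(True, [0, 0, 0]))  # True
```

The lookback handles ordering between the two signals. A capped value reported a few buckets ahead of the KPI drop still suppresses the alert. A value older than the window is ignored, so a stale cap cannot silence a later, genuine drop.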

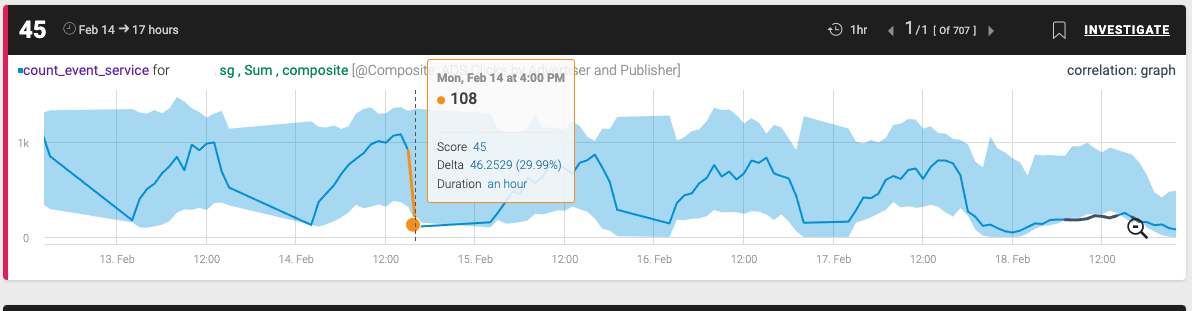

The illustrations below show how this technique prevents false positive alerts. The orange line marks the anomaly detected in the dropping KPI.

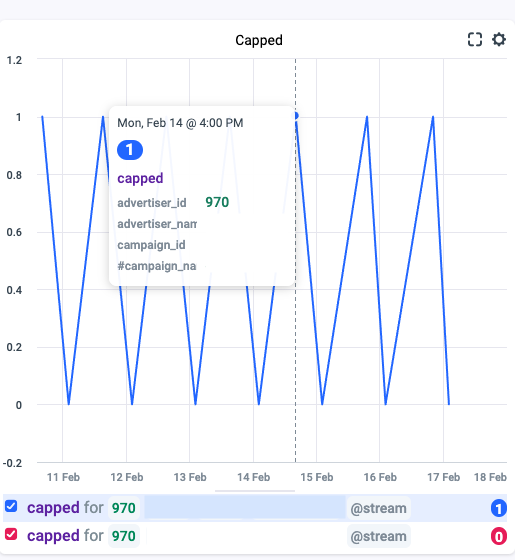

The corresponding capped campaign metric is reported in the image below.

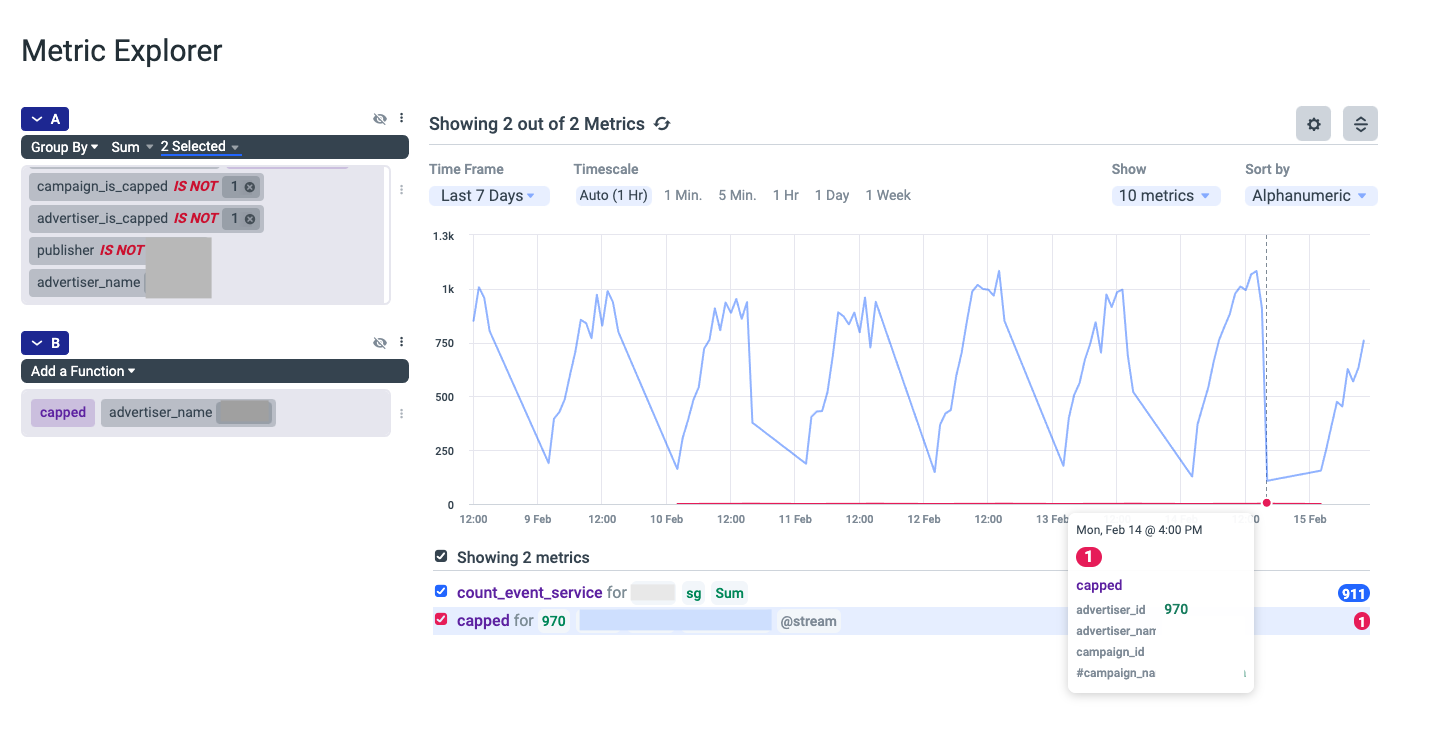

When the metrics are correlated and placed side by side, the resulting image looks like this:

Note the absence of the orange line that would indicate an alert-triggering anomaly.

Anodot’s approach works for any company with capped campaign budgets

While Anodot designed this approach for a specific client’s needs, it applies to any adtech company that runs capped campaigns. The goal is to eliminate the false positives that arise when campaigns reach their budget limit and KPIs such as CTR, impressions, and revenue drop as a result.

The adtech company must have granular campaign data and must register both capped and uncapped events to send to Anodot via the API. It is recommended that the capped-event metric share the seasonality of the monitored campaigns. In practice, this means capped and uncapped events should be sent to Anodot at the same intervals as the campaign data, for example every 5 minutes or hourly.
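One way to meet that recommendation is to resample raw capping-status changes onto the same fixed buckets the campaign KPIs use. The sketch below assumes that status changes arrive as `(unix_timestamp, 0 or 1)` pairs and that a campaign is uncapped until told otherwise. Both are assumptions, and the function name is hypothetical.

```python
from typing import List, Tuple


def to_interval_series(
    events: List[Tuple[int, int]], start: int, end: int, interval: int
) -> List[Tuple[int, int]]:
    """
    Resample capping-status changes onto fixed buckets covering [start, end),
    each `interval` seconds long, so the capped metric shares the campaign
    KPIs' reporting interval. Each bucket carries the status in effect when
    the bucket closes. Before the first event, the campaign is assumed uncapped (0).
    """
    events = sorted(events)
    series = []
    status, i = 0, 0
    for bucket_start in range(start, end, interval):
        bucket_end = bucket_start + interval
        # Apply every status change that happened before this bucket closes.
        while i < len(events) and events[i][0] < bucket_end:
            status = events[i][1]
            i += 1
        series.append((bucket_start, status))
    return series


# Capped at t=120, uncapped again at t=700, reported in 5-minute buckets.
print(to_interval_series([(120, 1), (700, 0)], start=0, end=900, interval=300))
# [(0, 1), (300, 1), (600, 0)]
```

Taking the status at bucket close is one design choice among several. An alternative marks a bucket as capped if the campaign was capped at any point within it, which suppresses more aggressively around the transition.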

Setup is straightforward, provided campaign monitoring is already in place. The first step is to send the capping events as metrics (0 or 1) with the relevant dimension property, such as the account ID, campaign name, or campaign ID. Next, Anodot uses the capped metrics as influencing metrics within the alert.
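The dimension property is what connects a KPI anomaly to its capped metric. The sketch below filters KPI anomalies by looking up the capped value under a key built from shared dimension properties, such as the account ID alone or the account ID plus the campaign ID. The anomaly record shape, the `capped` lookup table, and the function names are hypothetical illustrations, not Anodot's alert configuration.

```python
from typing import Dict, List, Optional, Tuple

Key = Tuple[Optional[str], ...]


def dimension_key(dimensions: Dict[str, str], props: Tuple[str, ...]) -> Key:
    """Build the lookup key from the dimension properties shared by KPI and capped metrics."""
    return tuple(dimensions.get(p) for p in props)


def alerts_to_send(
    anomalies: List[dict], capped: Dict[Key, int], props: Tuple[str, ...] = ("account_id",)
) -> List[dict]:
    """Keep only the KPI anomalies whose matching capped metric does not currently read 1."""
    return [a for a in anomalies if capped.get(dimension_key(a["dimensions"], props)) != 1]


anomalies = [
    {"metric": "impressions", "dimensions": {"account_id": "A1", "campaign_id": "C1"}},
    {"metric": "impressions", "dimensions": {"account_id": "A2", "campaign_id": "C9"}},
]
capped = {("A1",): 1, ("A2",): 0}  # latest capped value per account

print([a["dimensions"]["account_id"] for a in alerts_to_send(anomalies, capped)])  # ['A2']
```

Correlating at the account level suppresses drops for every campaign under a capped account. Keying on the campaign ID as well, for example `props=("account_id", "campaign_id")`, limits suppression to the specific campaign that hit its cap.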

If this sounds like a scenario that would help your company reduce false positive alerts while monitoring campaign performance, talk to us about getting it set up. With false positives eliminated, your team can concentrate on what really matters in performance monitoring.