Amazon S3 Explained

Amazon Simple Storage Service (S3) is a cornerstone of AWS and one of its most popular services. S3 lets customers store, secure, and retrieve data in S3 buckets on demand. It is widely used for its high availability, scalability, and performance. It offers a range of storage classes and supports many use cases, including website hosting, backups, application data storage, and data lake storage.

Amazon S3 has two primary components: buckets and objects. Users create and configure buckets according to their needs, and those buckets hold the objects users upload to the cloud.

The six main Amazon S3 storage classes and how their prices differ

While S3 prides itself on ease of use, choosing the right storage class is not always as simple, and the choice can have a large impact on costs. The free tier includes 5 GB of storage in the Standard class, but it is available only to new customers. Beyond the free tier, AWS offers six main S3 storage classes: Standard, Intelligent Tiering, Infrequent Access, One-Zone Infrequent Access, Glacier, and Glacier Deep Archive. Each offers different features, availability, retrieval speed, and performance. Here is an overview of each class:

Standard

S3 Standard storage is best suited for frequently accessed data. It's elastic in that you pay only for what you use, and customers typically use it for data-intensive content they want to access at any time, from anywhere.

Infrequent Access Storage

S3 Infrequent Access Storage is best suited to data that is accessed rarely or on an ad hoc basis but must be available quickly when needed. Backup and recovery images for a web or application server are a typical example. Storing data in Infrequent Access costs less per GB than Standard storage, but you pay a per-GB retrieval fee each time you access it, so costs rise with access frequency.
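To make the Standard versus Infrequent Access trade-off concrete, here is a minimal Python sketch that compares one month of storage plus retrieval cost for each class. The per-GB prices are placeholders chosen for illustration, not quotes; substitute current AWS pricing for your region. The sketch also ignores request charges, as well as Infrequent Access's 30-day minimum storage duration and 128 KB minimum billable object size.

```python
# Hypothetical per-GB prices for illustration only; check current AWS pricing.
STANDARD_STORAGE = 0.023   # $ per GB-month (assumed)
IA_STORAGE = 0.0125        # $ per GB-month (assumed)
IA_RETRIEVAL = 0.01        # $ per GB retrieved (assumed)


def monthly_cost(stored_gb: float, retrieved_gb: float,
                 storage_price: float, retrieval_price: float = 0.0) -> float:
    """Storage charge plus retrieval charge for one month (requests ignored)."""
    return stored_gb * storage_price + retrieved_gb * retrieval_price


def cheaper_class(stored_gb: float, retrieved_gb: float) -> str:
    """Return the S3 storage class name that is cheaper under the assumed prices."""
    standard = monthly_cost(stored_gb, retrieved_gb, STANDARD_STORAGE)
    infrequent = monthly_cost(stored_gb, retrieved_gb, IA_STORAGE, IA_RETRIEVAL)
    return "STANDARD_IA" if infrequent < standard else "STANDARD"


def break_even_retrieval_gb(stored_gb: float) -> float:
    """GB retrieved per month at which both classes cost the same."""
    return stored_gb * (STANDARD_STORAGE - IA_STORAGE) / IA_RETRIEVAL


if __name__ == "__main__":
    print(cheaper_class(1000, 100))    # light access favors Infrequent Access
    print(cheaper_class(1000, 2000))   # heavy access favors Standard
    print(round(break_even_retrieval_gb(1000), 2))
```

Under these assumed prices, 1,000 GB stored breaks even at roughly 1,050 GB retrieved per month; below that, Infrequent Access is cheaper.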

One-Zone Infrequent Access

The “regular” Infrequent Access class provides high resilience by storing data redundantly across at least three availability zones within a region. For use cases where data is accessed infrequently and lower availability is acceptable, but quick retrieval is still needed, One-Zone Infrequent Access Storage is the best option. S3 stores the data in a single availability zone, at a cost about 20% lower than Infrequent Access Storage.

Intelligent Tiering

Amazon offers an S3 storage class called Intelligent Tiering that analyzes usage patterns and automatically moves data between frequent and infrequent access tiers based on how often it is accessed. Its selling point is that it saves operators the labor of monitoring and moving the data themselves. That said, it carries a monitoring and automation charge of $0.0025 per 1,000 objects per month.
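Because the Intelligent Tiering monitoring fee scales with object count rather than data volume, it is worth estimating before enabling the class on buckets with many objects. The Python sketch below applies the $0.0025 per 1,000 objects per month rate. It also assumes, per AWS documentation, that objects smaller than 128 KB are neither monitored nor charged the fee; the function names are illustrative.

```python
# Monitoring fee: $0.0025 per 1,000 monitored objects per month.
FEE_PER_1000_OBJECTS = 0.0025
# Assumption from AWS documentation: objects under 128 KB are not monitored or charged.
MIN_MONITORED_BYTES = 128 * 1024


def monitored_count(object_sizes_bytes) -> int:
    """Count objects large enough to incur the monitoring fee."""
    return sum(1 for size in object_sizes_bytes if size >= MIN_MONITORED_BYTES)


def monthly_monitoring_fee(num_monitored: int) -> float:
    """Monthly Intelligent Tiering monitoring and automation fee in dollars."""
    return num_monitored / 1000 * FEE_PER_1000_OBJECTS


if __name__ == "__main__":
    # 500 small (4 KB) objects and 1,500 large (5 MB) objects.
    sizes = [4 * 1024] * 500 + [5 * 1024 * 1024] * 1500
    print(monitored_count(sizes))              # only the large objects count
    print(monthly_monitoring_fee(10_000_000))  # about $25 for ten million objects
```

For buckets dominated by many small objects, the fee is negligible; for millions of large, rarely changing objects, compare it against the savings from automatic tiering.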

Glacier

Most customers use S3 Glacier (now named S3 Glacier Flexible Retrieval) for record retention and compliance. Standard retrievals typically take hours to complete, making Glacier a poor fit for use cases that need fast access. That said, its lower cost makes it ideal when access speed isn't a concern.

Glacier Deep Archive

S3 Glacier Deep Archive offers further cost savings but imposes stricter access limitations. It is best suited to data that customers need to access only once or twice a year, when they can tolerate retrieval times of up to 12 hours, or up to 48 hours for lower-cost bulk retrievals.
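Because retrieval from Glacier and Glacier Deep Archive is asynchronous, reading an archived object starts with a restore request. The Python sketch below builds the `RestoreRequest` argument for boto3's `restore_object` call. The storage class and tier names are S3's own, while the helper and its defaults are illustrative. Deep Archive does not offer the Expedited tier, so the sketch rejects that combination.

```python
# Retrieval tiers S3 accepts when restoring archived objects, by storage class.
RESTORE_TIERS = {
    "GLACIER": {"Expedited", "Standard", "Bulk"},
    "DEEP_ARCHIVE": {"Standard", "Bulk"},
}


def restore_request(storage_class: str, days: int = 7, tier: str = "Standard") -> dict:
    """Build the RestoreRequest argument for boto3's restore_object call.

    `days` is how long the temporary restored copy stays available.
    """
    allowed = RESTORE_TIERS.get(storage_class)
    if allowed is None:
        raise ValueError(f"this sketch only handles GLACIER and DEEP_ARCHIVE, not {storage_class}")
    if tier not in allowed:
        raise ValueError(f"tier {tier!r} is not available for {storage_class}")
    return {"Days": days, "GlacierJobParameters": {"Tier": tier}}


if __name__ == "__main__":
    print(restore_request("GLACIER", tier="Expedited"))
    print(restore_request("DEEP_ARCHIVE", days=3, tier="Bulk"))
```

With a boto3 client, the request would be passed as `s3.restore_object(Bucket="my-bucket", Key="archive/records.tar", RestoreRequest=restore_request("DEEP_ARCHIVE", tier="Bulk"))`, where the bucket and key are hypothetical; `head_object` then reports progress in its `Restore` field until the restored copy is ready.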

How to Reduce AWS S3 Spending

AWS S3 owes its popularity to its simplicity and versatility. Companies and customers around the globe use it to store personal files, host websites and blogs, and power data lakes for analytics. The main downside is the price tag, which can climb quickly depending on how much data is stored and how frequently it is accessed.

Here are some helpful tips for reducing AWS S3 spend:

Use Compression

Because much of S3's cost is based on the amount of data stored, compressing data before uploading it to S3 can yield significant savings. When users need a file, they can download the compressed copy and decompress it on their local machines.
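As a minimal sketch of the compress-before-upload pattern, the Python example below uses the standard library's `gzip` module to compress bytes in memory and restore them after download. The helper names and the sample payload are illustrative.

```python
import gzip


def compress_for_upload(data: bytes, level: int = 9) -> bytes:
    """Gzip-compress bytes before uploading them to S3."""
    return gzip.compress(data, compresslevel=level)


def decompress_after_download(blob: bytes) -> bytes:
    """Restore the original bytes after downloading the compressed object."""
    return gzip.decompress(blob)


if __name__ == "__main__":
    # Repetitive text such as logs or CSV exports compresses well.
    payload = b"timestamp,level,message\n" + b"2024-01-01T00:00:00Z,INFO,ok\n" * 10_000
    packed = compress_for_upload(payload)
    print(f"{len(payload)} bytes -> {len(packed)} bytes")
    assert decompress_after_download(packed) == payload
```

The compressed bytes could then be uploaded with boto3, for example `s3.put_object(Bucket="my-bucket", Key="exports/data.csv.gz", Body=packed)` with a hypothetical bucket and key. Formats that are already compressed, such as JPEG images or MP4 video, gain little from this step.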

Continuously monitor S3 objects and access patterns to catch anomalies and right-size storage class selections

Each storage class has different costs, strengths, and weaknesses. Actively monitoring S3 buckets and objects to ensure each is right-sized into the correct storage class can drastically reduce costs. Storage class is set per object, so a single bucket can mix tiers; make sure every object is in the right one.
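One native way to keep objects in an appropriate class as they age is an S3 lifecycle configuration. The Python sketch below builds one in the dictionary shape boto3's `put_bucket_lifecycle_configuration` expects; the transition days and the rule ID scheme are illustrative assumptions. Lifecycle transitions are based on object age, not access patterns, so data with unpredictable access may suit Intelligent Tiering better.

```python
def tiering_lifecycle(prefix: str = "", ia_days: int = 30,
                      glacier_days: int = 120, deep_archive_days: int = 365) -> dict:
    """Build an S3 lifecycle configuration that moves aging objects to cheaper classes.

    The default day counts are illustrative, not recommendations.
    """
    # S3 requires objects to be at least 30 days old before moving to STANDARD_IA;
    # this sketch also requires each later transition to come after the previous one.
    if not (30 <= ia_days < glacier_days < deep_archive_days):
        raise ValueError("expected 30 <= ia_days < glacier_days < deep_archive_days")
    return {
        "Rules": [
            {
                "ID": f"tier-down-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


if __name__ == "__main__":
    print(tiering_lifecycle("logs/"))
```

The result could be applied with `s3.put_bucket_lifecycle_configuration(Bucket="my-bucket", LifecycleConfiguration=tiering_lifecycle("logs/"))`, using a hypothetical bucket name. Each call replaces the bucket's whole lifecycle configuration, so any existing rules need to be merged into the `Rules` list first.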

Remove or downgrade unused or seldom-used S3 buckets

One common mistake in managing S3 storage is deleting the contents of a bucket but leaving the empty bucket in place. It's best to remove such buckets entirely to keep the account tidy and eliminate unnecessary security exposure. For buckets that still hold seldom-used data, move the objects to a cheaper storage class instead.
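Finding empty buckets can be scripted. The Python sketch below takes any object that behaves like a boto3 S3 client and returns the names of buckets with no object versions or delete markers; S3 will not delete a bucket that still holds either, so the check looks at both. The function name is hypothetical, and pagination of the bucket list is ignored.

```python
def find_empty_buckets(s3) -> list:
    """Return names of buckets that hold no object versions or delete markers.

    `s3` is expected to behave like a boto3 S3 client.
    """
    empty = []
    for bucket in s3.list_buckets().get("Buckets", []):
        name = bucket["Name"]
        # One key is enough to tell whether anything is left in the bucket.
        # list_object_versions also reports current objects in unversioned buckets.
        resp = s3.list_object_versions(Bucket=name, MaxKeys=1)
        if not resp.get("Versions") and not resp.get("DeleteMarkers"):
            empty.append(name)
    return empty
```

In practice this would be called as `find_empty_buckets(boto3.client("s3"))`; after reviewing the list, each bucket can be removed with `s3.delete_bucket(Bucket=name)`.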

Use a dedicated cloud cost optimization service rather than relying only on cloud provider tools

The most important recommendation we can make to keep cloud costs under control is to use a dedicated, third-party cost optimization tool instead of relying strictly on the cloud provider. The native cost management tools cloud providers offer do not go far enough in helping customers understand and optimize their cloud cost decisions.

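– Suspend versioning if it is not required (once enabled, S3 versioning can be suspended but not fully disabled).

– Use VPC endpoints, such as S3 gateway endpoints, to keep S3 traffic off NAT gateways and reduce data transfer costs.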
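Suspending versioning stops new versions from being created, but versions that already exist keep accruing storage charges until they are deleted. The Python sketch below pairs the two steps: keyword arguments for boto3's `put_bucket_versioning`, and a lifecycle rule that expires noncurrent versions and cleans up leftover delete markers. The helper names and the 30-day default are illustrative.

```python
def suspend_versioning_args(bucket: str) -> dict:
    """Keyword arguments for boto3's put_bucket_versioning to suspend versioning."""
    return {"Bucket": bucket, "VersioningConfiguration": {"Status": "Suspended"}}


def noncurrent_version_rule(noncurrent_days: int = 30) -> dict:
    """Lifecycle rule that expires old versions and removes expired delete markers."""
    if noncurrent_days < 1:
        raise ValueError("noncurrent_days must be at least 1")
    return {
        "ID": f"expire-noncurrent-{noncurrent_days}d",
        "Filter": {"Prefix": ""},
        "Status": "Enabled",
        # Permanently delete versions this many days after they stop being current.
        "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
        # Remove delete markers once no versions remain behind them.
        "Expiration": {"ExpiredObjectDeleteMarker": True},
    }


if __name__ == "__main__":
    print(suspend_versioning_args("my-bucket"))
    print({"Rules": [noncurrent_version_rule()]})
```

With a boto3 client these would be applied as `s3.put_bucket_versioning(**suspend_versioning_args("my-bucket"))` and `s3.put_bucket_lifecycle_configuration(Bucket="my-bucket", LifecycleConfiguration={"Rules": [noncurrent_version_rule()]})`, where `my-bucket` is a placeholder.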

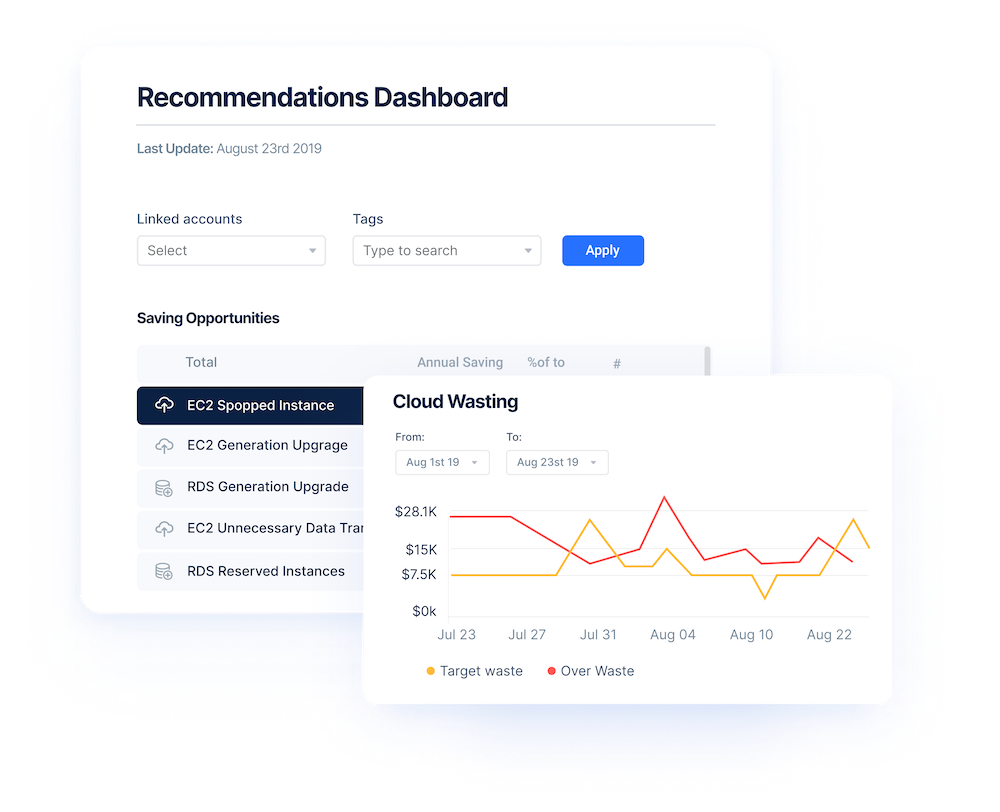

Cloud Cost Management with Anodot

Organizations seeking to understand and control their cloud costs need a dedicated tool. Anodot's Cloud Cost solutions easily connect to cloud providers like AWS to monitor and manage cloud spending in real time and alert teams to critical cost-saving recommendations.

Here are some of the key features:

Anodot makes lifecycle recommendations in real time, based on actual usage patterns and data needs. Rather than having teams manually monitor S3 buckets and decide if and when to switch tiers, Anodot provides a detailed, staged plan for each object that accounts for seasonal patterns.

Versioning can significantly increase S3 costs because every stored version of an object is billed at normal S3 storage rates. Anodot continuously monitors object versions and provides tailored, actionable recommendations on which versions to keep.

Many customers don't realize how much uploading files into S3 can affect costs. In particular, when a large multipart upload is interrupted, the parts already uploaded remain stored, and billed, until the upload is completed or aborted. Anodot provides comprehensive recommendations for uploading files and for which files to delete in which bucket.
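Independently of any monitoring tool, S3 itself can clean up interrupted multipart uploads. The Python sketch below shows two illustrative helpers: a lifecycle rule using S3's `AbortIncompleteMultipartUpload` action, and a function that lists uploads older than a cutoff through any object that behaves like a boto3 S3 client. The helper names and the 7-day default are assumptions, and only the first page of results (up to 1,000 uploads) is inspected.

```python
from datetime import datetime, timedelta, timezone


def abort_incomplete_uploads_rule(days: int = 7) -> dict:
    """Lifecycle rule: abort multipart uploads not completed within `days` days."""
    return {
        "ID": f"abort-incomplete-mpu-{days}d",
        "Filter": {"Prefix": ""},
        "Status": "Enabled",
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
    }


def stale_uploads(s3, bucket: str, older_than_days: int = 7, now=None) -> list:
    """Return (key, upload_id) pairs for multipart uploads started before the cutoff.

    `s3` is expected to behave like a boto3 S3 client; pagination is ignored.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=older_than_days)
    resp = s3.list_multipart_uploads(Bucket=bucket)
    return [(u["Key"], u["UploadId"])
            for u in resp.get("Uploads", [])
            if u["Initiated"] < cutoff]
```

Each stale upload found this way could be aborted with `s3.abort_multipart_upload(Bucket=bucket, Key=key, UploadId=upload_id)`, while the lifecycle rule handles future interruptions automatically.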

Start Reducing Cloud Costs Today!

Connect with one of our cloud cost management specialists to learn how Anodot can help your organization control costs, optimize resources and reduce cloud waste.