One of Christopher Nolan’s earlier films, “Memento”, really captures the feeling of being a data analyst looking for insights while monitoring growing volumes of data.

How is Lack of Memory like Monitoring Today’s Data?

In “Memento”, the story of an insurance investigator who suffers from anterograde amnesia (an inability to form new memories) is revealed in reverse. The film reflects on how hard it is for sufferers to appreciate the passage of time when they have almost no recent memory to draw on. This is dramatized every time he meets someone: he has to introduce himself again, as though for the first time, even though they may have met and spoken at length shortly before.

Once, not so long ago, data analysts and BI managers had a good idea of what was in their data, what was happening in their business operations, and how to respond to changes. Today, sprawling volumes of data can be huge, unruly beasts. The business expects to see updates and changes at a faster rate. That drives development efforts and puts operations under further pressure to stay on top of all this data and the changes that happen over time.

For BI managers surveying today’s data collections, the view can be overwhelming. Across a landscape filled with cloud-based businesses, data analysts can struggle to gain visibility into an increasingly murky and distorted field. They may be unable to determine exactly what they know about their applications or how best to manage them. It’s a complex issue for any organization.

That’s what made me think of the memory condition in “Memento”. The data being collected changes so fast, with so many issues and metrics to track, that what you saw or understood just a few hours ago could have changed completely. It is much like the unreliable memory of the one man portrayed in “Memento”.

Overwhelmed by Data

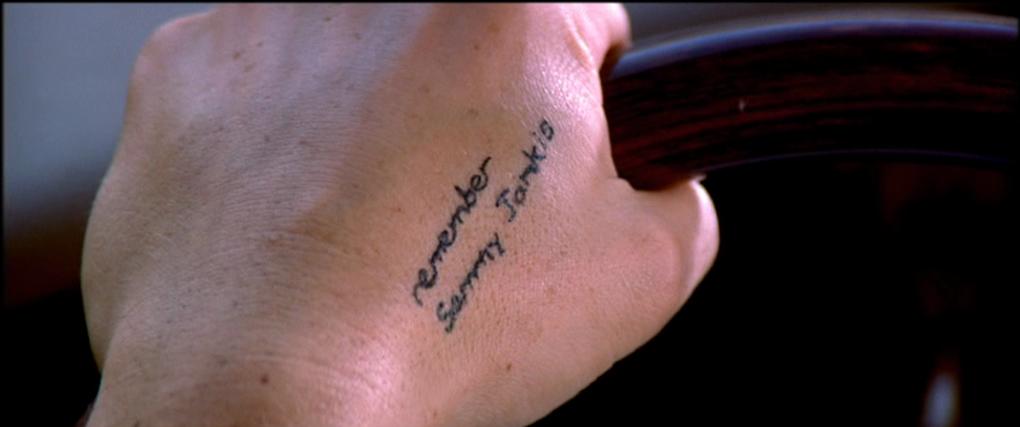

In the movie, the main character, Leonard Shelby, described a case he once investigated involving Sammy Jankis. Sammy had been in an accident that left him unable to form new memories. He could start a conversation but would get confused in the middle, not knowing what he was talking about, and he couldn’t follow the plot of a TV show. Leonard described how Sammy tried to compensate: “He tried writing himself notes. Lots of notes. But he’d get confused.”

This reminded me of how today’s data management tools approach the challenge. Tools like data visualizations and dashboards collect enormous amounts of data. Yet they lack the real-time analytic capabilities to make sense of all this information, or to make it useful at the moment managers need it.

Unreliable Tools for Big Data

The main character of “Memento” declared that he had a more successful approach than taking notes: “I’ve got a more graceful solution to the memory problem. I’m disciplined and organized. I use habit and routine to make my life possible.”

The main character’s buddy and confidant, however, pointed out the main flaw in this approach: “You can’t trust a man’s life to your little notes and pictures. Why? Because you’re relying on them alone. You don’t remember what you’ve discovered or how. Your notes might be unreliable.”

I was struck by how similar this situation is to the tools in place for data management, such as traditional BI tools. These tools are meant to present operators with an accurate picture of what is happening now, even as new data is collected at breakneck speed. They can create a false sense of comfort: that you have actually automated your data management and that everything is under control as planned, with no surprises.

One problem is that you need to track thousands or even millions of metrics, often updated every minute, across global operations, while modeling only what you already know. Beyond the sheer number of metrics in many businesses lies the complexity of each individual metric. Different metrics have different patterns (or no patterns at all) and different amounts of variability in their sampled values. In addition, the metrics themselves keep changing, often exhibiting new patterns as the data settles into a new “normal.”

Nonetheless, some of the most variable metrics are also among the most valuable to measure, provided they can be handled properly.

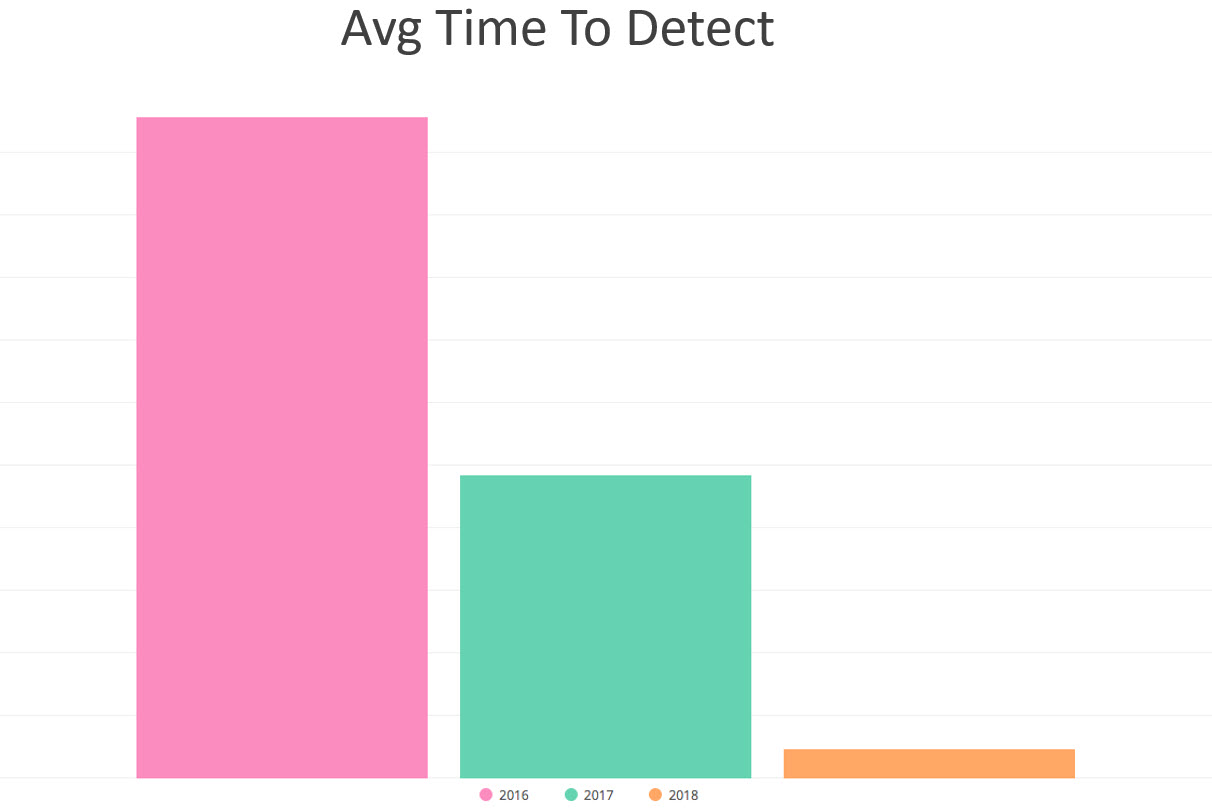

For example, King, the creator of Candy Crush, reported that it collected about 40 billion events every day from all its games. Through analysis, this data was distilled and filtered down to just 45 anomalies a day. Of those, on average, 1.2 were considered high-severity incidents. As King’s Product Owner of Business Intelligence, Lukasz Korbolewski, shared, “Using Anodot we are seeing time to detect of 20% of the previous. So it’s 80% improvement to the previous year.”

Data Management Guesswork and Getting out of Memento Mode

If you haven’t seen “Memento”, I won’t give away the twists and the ending. I’ll just say that in this situation, the main character, despite his convictions that he has found a way to cope with his condition, is still left blindly guessing at how to navigate his life, hardly aware of what he has achieved from moment to moment or day to day.

Data management needs to eliminate the guesswork of “Memento” mode and take on the complexity and dynamics of today’s immense volumes of data.

A meaningful autonomous analytics solution can surface actionable business insights, rather than generating more big data dashboards and reports to scour and review. Highly scalable, machine-learning-based algorithms can learn the normal pattern of any number of data points and correlate different signals. This lets them accurately identify anomalies (those surprises we never expected) that require immediate action or investigation, before they hurt revenue.
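To make “learning the normal pattern” a little more concrete, here is a deliberately simple sketch for a single metric. It is not Anodot’s algorithm and does not attempt the signal correlation mentioned above. It treats the last few values as the baseline and flags any new value more than a set number of standard deviations from that baseline’s mean. The class and function names (`RollingBaseline`, `find_anomalies`) and the default window of 5 samples and 3-standard-deviation threshold are hypothetical choices made for illustration.

```python
from collections import deque
from statistics import mean, stdev


class RollingBaseline:
    """Hypothetical per-metric detector: learns a 'normal' range from recent values."""

    def __init__(self, window: int = 5, threshold: float = 3.0):
        # Only the most recent `window` values form the baseline, so a sustained
        # shift gradually becomes the new "normal". Both defaults are assumptions.
        self.history = deque(maxlen=window)
        self.threshold = threshold  # how many standard deviations count as a surprise

    def observe(self, value: float) -> bool:
        """Return True if `value` falls outside the learned range, then learn from it."""
        anomalous = False
        if len(self.history) == self.history.maxlen:  # wait for a full window
            mu = mean(self.history)
            sigma = stdev(self.history)
            # A perfectly flat window has no spread to compare against; this sketch skips it.
            anomalous = sigma > 0 and abs(value - mu) > self.threshold * sigma
        self.history.append(value)
        return anomalous


def find_anomalies(series, window=5, threshold=3.0):
    """Return the indices of values flagged as anomalous in one metric's series."""
    detector = RollingBaseline(window, threshold)
    return [i for i, value in enumerate(series) if detector.observe(value)]


readings = [100, 102, 98, 101, 99, 100, 160, 101, 99, 100]
print(find_anomalies(readings))  # [6]: only the spike to 160 is flagged
```

Because the baseline slides forward, a lasting jump is flagged once and then absorbed as the new normal. Real systems dealing with seasonality, irregular sampling and millions of metrics need considerably more than a rolling mean and standard deviation.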

Automated anomaly detection can free your talented data analysts from the futile task of manually trying to catch critical anomalies while the business marches forward, sometimes at breakneck speed.

Get out of “Memento” mode, and don’t be left guessing about what’s happening with your business’s key data.