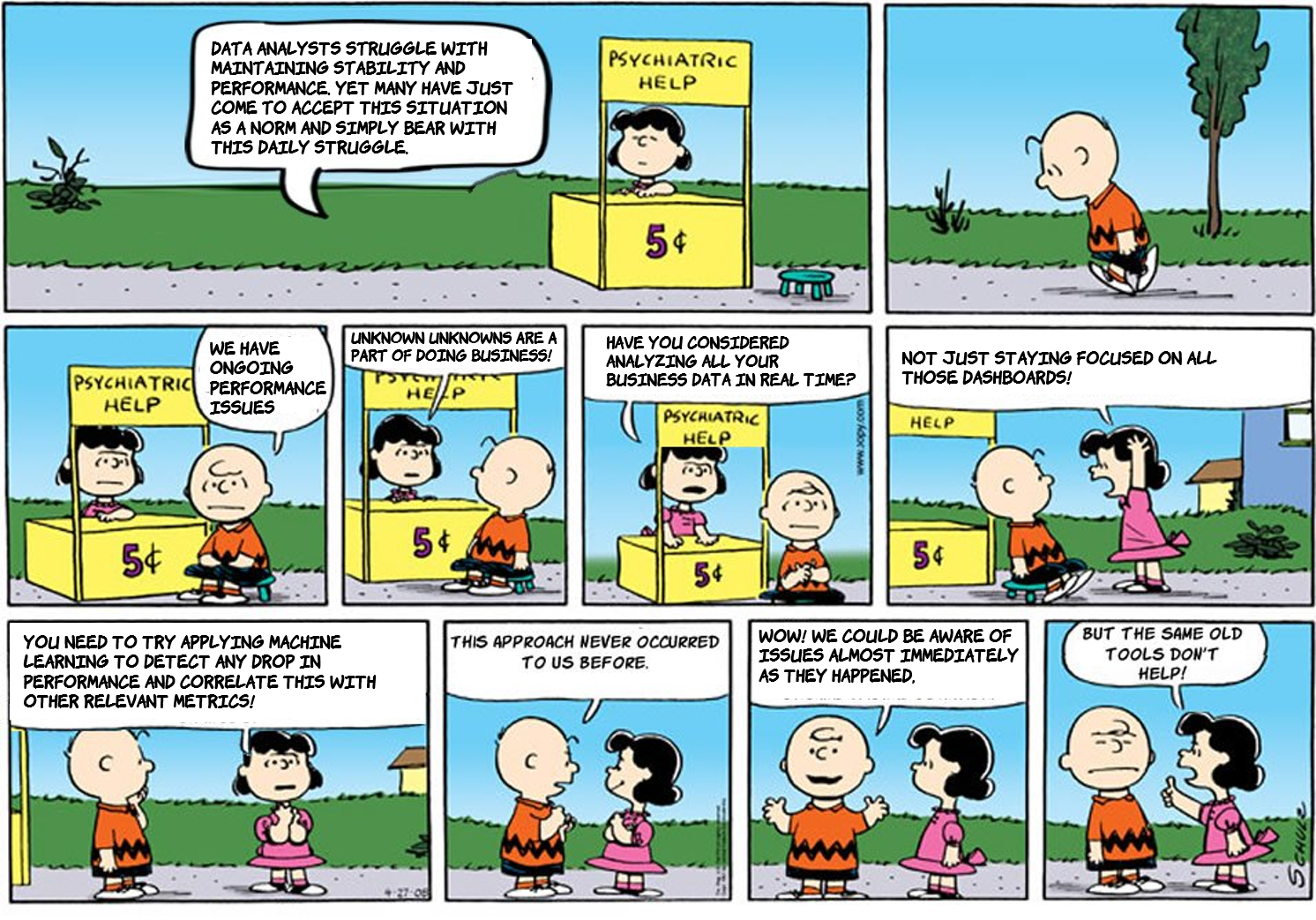

It is surprising how often business operations teams still struggle to get an integrated view of all their business metrics. As the volume of collected data grows, data analysts simply can’t keep up. Worse than the struggle itself is that many in data analytics have come to accept it as the norm and put up with it day after day. They haven’t even considered how to improve the way they manage their data infrastructure.

Unknown Issues

The increasingly agile, data-driven approach to development, in which teams parse user data and release new versions regularly, has left many in data operations at a loss. They are inundated with data that must be refined into business insights before it has any real value. Yet for a business, analyzing streaming data in real time, continuously generated by thousands of data sources, is not a simple prospect.

While data operations teams strive for 100% service availability, constant changes and unknown unknowns (the things we don’t know we don’t know) can disrupt availability and performance. For a business, few surprises are good ones. Consider discovering that a business process error has kept your customers from completing their online orders for over a week. The problem is that we don’t know what we don’t know until it’s too late.

So how do you identify unknown unknowns in a timely way and avoid complex, revenue-losing problems that may persist right under your nose?

Maintaining Environment Consistency

Changes now stream into the business system environment at an ever faster pace, yet most traditional monitoring tools focus on what has already happened. This builds in a delay between the moment something important happens and the moment it may (or may not) be discovered through the traditional monitoring process. Data analysts can’t assume that what happened in the past will happen again in the future. Today’s organizations need to leverage data in real time: to keep a game engaging, to drive revenue, to get better performance results, and so on.

Given the dynamic nature of data and the constant stream of new services and products entering the market every quarter, Data Operations teams need a comprehensive understanding of the ebb and flow of company data, including business anomalies, trend changes and changes in predictions, along with the proper tools to monitor it.

Investigating Incidents As They Occur

When an incident occurs, data analysts spend a lot of time investigating issues hidden within a vast volume of data and events. Detecting and investigating such hidden issues is difficult, especially when you don’t know beforehand what to look for.

At the scale of millions of metrics, selecting, re-selecting and tuning a model for each metric by hand is impossible. Far more time and money would be spent managing such a manual system than investigating and acting on the insights it generates.

Same Old Tools?

Many end users don’t look at dashboards because they actually use them; rather, they hope to gain a (false) sense of security that they know everything about their business. This points to one of the weaknesses of traditional business intelligence tools: even at peak performance, they can miss key issues.

Across the many layers of business and technology, teams relying on traditional BI analytics tools often encounter unpleasant surprises, discovering issues and opportunities long after the financial or reputational damage is done. Breaking a single metric such as daily revenue down by product, geo region, browser and so on multiplies it into a large number of granular metrics, which shows how dimensionality undermines the effectiveness of BI tools.
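To illustrate how quickly dimensionality multiplies metrics, the Python sketch below expands one metric, daily revenue, into one granular metric per combination of product, geo region and browser. The dimension values and the `granular_metric_names` helper are hypothetical, chosen only to show the arithmetic: the number of series is the product of the number of values in each dimension.

```python
from itertools import product

# Hypothetical dimension values; a real breakdown would come from your own data.
dimensions = {
    "product": ["shoes", "shirts", "hats", "bags"],
    "geo_region": ["NA", "EU", "APAC"],
    "browser": ["chrome", "safari", "firefox", "edge", "other"],
}

def granular_metric_names(base_metric, dims):
    """Yield one metric name per combination of dimension values."""
    keys = list(dims)
    for combo in product(*(dims[k] for k in keys)):
        labels = ",".join(f"{k}={v}" for k, v in zip(keys, combo))
        yield f"{base_metric}{{{labels}}}"

names = list(granular_metric_names("daily_revenue", dimensions))
print(len(names))  # → 60
print(names[0])    # → daily_revenue{product=shoes,geo_region=NA,browser=chrome}
```

With 4 products, 3 regions and 5 browsers this yields 4 × 3 × 5 = 60 separate series to watch; adding a fourth dimension with 10 values would raise the count to 600.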

Monitoring high-dimensional data in real time is one challenge; companies frequently face another: accounting for seasonal variability in their metrics. Traditional analytics tools struggle to account for seasonality, so very specific metrics get overlooked and important business incidents can be missed. In our fast-paced, multi-faceted world, these data “blind spots” are a major flaw in traditional BI analytics tools, preventing them from delivering actionable insights in real time, which has become an absolute necessity.
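To make the seasonality point concrete, here is a minimal Python sketch of one common technique (not necessarily the one any particular product uses): compare each point with the values observed at the same seasonal phase in earlier cycles, using a median and median absolute deviation (MAD) baseline. The function name `seasonal_anomalies`, the 24-hour period, the 3.5 cutoff and the synthetic revenue series are all illustrative assumptions.

```python
import math
import statistics

def seasonal_anomalies(series, period, threshold=3.5, min_history=3):
    """Return indices whose value deviates strongly from earlier values
    observed at the same seasonal phase (the same hour of earlier days
    when the data is hourly and period=24)."""
    anomalies = []
    for i, value in enumerate(series):
        # Values at the same phase in earlier cycles only (no look-ahead).
        history = series[i % period:i:period]
        if len(history) < min_history:
            continue  # not enough cycles yet to learn this phase
        med = statistics.median(history)
        mad = statistics.median([abs(h - med) for h in history])
        # Robust z-score: 1.4826 scales MAD to a standard deviation for
        # normally distributed data. A zero MAD falls back to a tiny scale,
        # so any deviation from a perfectly flat history is flagged.
        scale = 1.4826 * mad if mad > 0 else 1e-9
        if abs(value - med) / scale > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical hourly revenue for one week: a daily cycle plus a small
# deterministic wobble standing in for noise.
series = [100 + 50 * math.sin(2 * math.pi * (t % 24) / 24) + (t % 5)
          for t in range(24 * 7)]
# Inject a dip at 06:00 on the last day, when revenue normally peaks near 150.
series[24 * 6 + 6] = 100.0

print(seasonal_anomalies(series, period=24))  # → [150]
```

The injected value of 100 sits well inside the series’ overall range (about 50 to 154), so a single static threshold set for the whole metric would miss it; comparing against the same hour on earlier days exposes it.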

New Approach to Data: Autonomous Analytics

Organizations try to leverage their current analytics solutions, doing all kinds of ‘data dancing’ and developing internal solutions to address these issues, but such efforts are just a patch.

Data-driven industries need a solution that operates autonomously. ‘Autonomous’ is the key component an analytics solution needs. Companies have tried to apply BI solutions to big data challenges by collecting data from many sources and organizing it in one place, but this doesn’t make big data actionable, and certainly not in real time.

What is autonomous analytics? It means changing how you approach your data and analytics from ‘pulling’ to ‘pushing’. Autonomous analytics is like adding an autopilot to business analytics: it alerts you when and where there is a problem, giving you the mastery you need over your business through self-learning, self-managing and self-service capabilities, all in real time and at web scale.
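The difference between pulling and pushing can be sketched in a few lines of Python. In this minimal illustration, with hypothetical names throughout (`PushMonitor`, `Alert`, the metric names and their baselines), every incoming data point is evaluated as it arrives, and subscribers are notified only when a point falls outside its expected band, so nobody has to poll a dashboard. The fixed baselines and the ±20% tolerance are assumptions for brevity; a self-learning system would derive its expectations from each metric’s history instead.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Alert:
    metric: str
    value: float
    expected: float

@dataclass
class PushMonitor:
    """Evaluates each incoming point and pushes an Alert to subscribers
    only when the point leaves the metric's expected band."""
    tolerance: float                 # allowed relative deviation, e.g. 0.2 = ±20%
    expected: Dict[str, float]       # hypothetical, hard-coded baselines
    subscribers: List[Callable[[Alert], None]] = field(default_factory=list)

    def subscribe(self, callback: Callable[[Alert], None]) -> None:
        self.subscribers.append(callback)

    def ingest(self, metric: str, value: float) -> None:
        baseline = self.expected.get(metric)
        if baseline is None:
            return  # no baseline for this metric yet, so nothing to compare
        if abs(value - baseline) > self.tolerance * abs(baseline):
            alert = Alert(metric, value, baseline)
            for notify in self.subscribers:
                notify(alert)  # push: the monitor calls you, not vice versa

received = []
monitor = PushMonitor(tolerance=0.2,
                      expected={"orders_per_min": 120.0,
                                "checkout_conversion": 0.035})
monitor.subscribe(received.append)
for metric, value in [("orders_per_min", 118.0),
                      ("orders_per_min", 3.0),
                      ("checkout_conversion", 0.034)]:
    monitor.ingest(metric, value)

print(received)  # → [Alert(metric='orders_per_min', value=3.0, expected=120.0)]
```

Only the collapse in orders triggers a notification; the two in-band readings pass silently, which is the point of pushing rather than pulling.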

Autonomous analytics can provide real-time understanding and control of the most critical aspects of the business (revenue, conversion, customer satisfaction, profitability), running continuously and hands-free over all business operations, data and metrics.

Such a system can tell you what is happening and what will happen, unlike today, when we constantly have to ask, ‘Do you have any idea what’s happening?’ Only with these insights can you confidently ensure customer satisfaction.