Amazon Simple Storage Service (S3), one of AWS’ most popular services, is a cloud-based data storage solution that offers highly scalable, reliable, and low-latency data storage infrastructure.

There are two primary components of Amazon S3:

- Buckets: containers that users create to store their objects

- Objects: files (and their metadata) that are uploaded to a bucket

Amazon S3 owes its popularity to its simplicity and versatility. Amazon S3 supports any use case that makes use of Internet storage, and is the backbone of the back-end data storage architecture for many companies, including many AWS services.

The main downside to Amazon S3 is cost, which can quickly add up depending on how much data is stored and how often it’s accessed. Keeping costs under control and optimizing them without impacting application performance or adding operational overhead is essential.

In this post, we’ll discuss the benefits of Amazon S3 storage and its primary cost drivers, and show how cloud cost management solutions like Anodot can help optimize Amazon S3 costs.

Amazon S3 benefits

Amazon S3 was engineered to meet Amazon’s internal developers’ needs for scalability, reliability, speed, low cost, and simplicity.

Amazon S3 is highly flexible: you can store any type and amount of data, and access it as frequently (e.g., multiple times a second for real-time analytics) or as infrequently (e.g., once for disaster recovery) as you need. Objects can range in size from 0 bytes to 5 terabytes, and the total volume of data you can store is unlimited.

Amazon S3 is secure by default: buckets that you create are accessible only to you, and you are in complete control of who has access to your data. Data is securely uploaded and downloaded through SSL endpoints using HTTPS. Amazon S3 automatically encrypts all uploaded data or, alternatively, lets you use your own encryption libraries. Finally, Amazon S3 supports user authentication to control access to data, highlights publicly accessible buckets, and alerts you to changes in bucket policies.
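Beyond offering HTTPS endpoints, a bucket owner can require them. A common pattern is a bucket policy that denies any request sent without TLS, using the `aws:SecureTransport` condition key. The sketch below only builds the policy document; the bucket name is a placeholder, and it assumes you apply the JSON yourself (for example with boto3's `put_bucket_policy`, which takes the policy as a JSON string).

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Build a bucket policy that rejects any S3 request not sent over HTTPS."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" when the request used plain HTTP.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    # "example-bucket" is a placeholder name.
    policy = deny_insecure_transport_policy("example-bucket")
    # put_bucket_policy expects this document serialized as a JSON string.
    print(json.dumps(policy, indent=2))
```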

Amazon S3 cost drivers

Managing and storing your data on Amazon S3 involves six cost components:

- Storage

- Requests and data retrieval

- Data transfers

- Data management and analytics

- Replication

- Processing data with S3 Object Lambda

Storage typically accounts for most of your S3 costs, up to 90%.

You pay for storing objects in your Amazon S3 buckets regardless of usage. Charges depend on your objects’ size, how long you stored the objects during the month, and the storage class.
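The three factors in the previous paragraph combine into a simple pro-rated estimate: size × fraction of the month stored × per-GB-month price for the class. The sketch below assumes a 30-day month and uses hypothetical prices; it also applies the minimum billable storage durations of two infrequent-access classes (30 days for S3 Standard-IA, 90 days for S3 Glacier Instant Retrieval), since deleting objects early does not avoid those charges. Treat it as an estimate, not a billing calculation.

```python
# Hypothetical per-GB-month prices for illustration only; real prices
# vary by Region and change over time.
PRICE_PER_GB_MONTH = {
    "STANDARD": 0.023,
    "STANDARD_IA": 0.0125,
    "GLACIER_IR": 0.004,
}

# Minimum billable storage durations: objects deleted or moved earlier
# are still charged as if they had been stored this long.
MIN_BILLABLE_DAYS = {
    "STANDARD": 0,
    "STANDARD_IA": 30,
    "GLACIER_IR": 90,
}


def storage_cost(size_gb: float, days_stored: float, storage_class: str,
                 days_per_month: float = 30.0) -> float:
    """Estimate the storage charge for one object over the time it is kept."""
    billable_days = max(days_stored, MIN_BILLABLE_DAYS[storage_class])
    gb_months = size_gb * billable_days / days_per_month
    return gb_months * PRICE_PER_GB_MONTH[storage_class]


if __name__ == "__main__":
    # 100 GB kept for 15 days is billed as half a month in S3 Standard...
    print(storage_cost(100, 15, "STANDARD"))
    # ...but as a full 30 days in S3 Standard-IA.
    print(storage_cost(100, 15, "STANDARD_IA"))
```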

Prices are similar across regions, except in South America (São Paulo), where they can be up to 75% higher.

Let’s go deeper into each cost driver below to find out how they affect your Amazon S3 costs.

Storage class

Because storage can account for up to 90% of your Amazon S3 costs, choosing the right storage class for your objects has a big impact, with potential savings of up to 95%.

Moving data into any Amazon S3 storage class incurs per-request ingest charges, so weigh the transfer and request costs against the expected savings before making a transition.

Amazon S3 offers several storage classes to match data access patterns, performance, and cost requirements:

- S3 Standard: General-purpose storage for any type of data, typically frequently accessed data. It has the highest per-GB storage price of the classes listed here.

- S3 Intelligent-Tiering: Cost-effective storage for data with unknown or changing access patterns. Data is automatically moved to the most cost-effective access tier when access patterns change, optimizing storage costs. A small fee is charged per object for monitoring and automation, but there are no additional charges for retrieving or moving objects between access tiers.

- S3 Standard-Infrequent Access: Ideal for long-lived objects stored longer than 30 days that are infrequently accessed but need to be available quickly (milliseconds) when needed. Data is stored redundantly across multiple geographically separate Availability Zones.

- S3 One Zone-Infrequent Access: Ideal for long-lived objects stored longer than 30 days that are infrequently accessed but need to be available quickly (milliseconds) when needed. Data is stored redundantly within a single Availability Zone for 20% less cost than S3 Standard-Infrequent Access storage.

- S3 Glacier Instant Retrieval: Ideal for long-lived objects stored longer than 90 days that are accessed once a quarter and must be available quickly (milliseconds).

- S3 Glacier Flexible Retrieval: Ideal for long-term backups and archives with flexible retrieval options ranging from 1 minute to 12 hours. Formerly known as S3 Glacier.

- S3 Glacier Deep Archive: Ideal for long-lived objects stored longer than 180 days that are accessed once or twice a year and can be restored within 12 hours.
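One way to act on the storage classes above is an S3 Lifecycle configuration that transitions objects to cheaper classes as they age. The sketch builds the configuration as a plain dictionary in the shape the S3 lifecycle API expects; the prefix, rule ID, and day thresholds are illustrative assumptions loosely based on the access patterns described above, not recommendations. Each transition is billed as a request, so weigh those charges before applying a rule like this.

```python
import json

# S3 lifecycle storage-class identifiers for the classes discussed above.
LIFECYCLE_CLASSES = {"STANDARD_IA", "ONEZONE_IA", "INTELLIGENT_TIERING",
                     "GLACIER_IR", "GLACIER", "DEEP_ARCHIVE"}


def tiering_lifecycle_config(prefix: str) -> dict:
    """Lifecycle configuration that moves aging objects to cheaper classes."""
    transitions = [
        {"Days": 30, "StorageClass": "STANDARD_IA"},    # rarely read after a month
        {"Days": 90, "StorageClass": "GLACIER_IR"},     # roughly quarterly access
        {"Days": 180, "StorageClass": "DEEP_ARCHIVE"},  # archive after six months
    ]
    return {
        "Rules": [
            {
                "ID": "tier-down-aging-objects",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": transitions,
            }
        ]
    }


if __name__ == "__main__":
    # "logs/" is a placeholder prefix.
    config = tiering_lifecycle_config("logs/")
    print(json.dumps(config, indent=2))
    # With boto3 and credentials configured, this dict could be applied with:
    # boto3.client("s3").put_bucket_lifecycle_configuration(
    #     Bucket="example-bucket", LifecycleConfiguration=config)
```

For data whose access pattern is unknown, S3 Intelligent-Tiering avoids having to pick these thresholds at all.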

Data retrievals and requests

Retrieving objects stored in infrequent access tiers — S3 Standard-Infrequent Access, S3 One Zone-Infrequent Access, S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval, and S3 Glacier Deep Archive — is the next major S3 cost driver.

Changing access patterns are a poor fit for infrequent access tiers, because retrieval fees can push your costs above what you’d pay in S3 Standard. With S3 Intelligent-Tiering, you can save money even when access patterns change, with no performance impact, no operational overhead, and no retrieval fees.
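The trade-off described above reduces to a break-even point: S3 Standard-IA is cheaper than S3 Standard only while the amount of data retrieved each month stays below a threshold. The prices below are hypothetical placeholders, and the sketch deliberately ignores request charges and minimum storage durations.

```python
# Hypothetical prices for illustration only (USD).
STANDARD_PER_GB_MONTH = 0.023
IA_PER_GB_MONTH = 0.0125
IA_RETRIEVAL_PER_GB = 0.01


def monthly_cost_standard(stored_gb: float) -> float:
    # S3 Standard has no per-GB retrieval fee.
    return stored_gb * STANDARD_PER_GB_MONTH


def monthly_cost_ia(stored_gb: float, retrieved_gb: float) -> float:
    # Cheaper storage, but every GB read back is charged.
    return stored_gb * IA_PER_GB_MONTH + retrieved_gb * IA_RETRIEVAL_PER_GB


def breakeven_retrieved_gb(stored_gb: float) -> float:
    """GB retrieved per month above which Standard-IA costs more than Standard."""
    savings_per_month = stored_gb * (STANDARD_PER_GB_MONTH - IA_PER_GB_MONTH)
    return savings_per_month / IA_RETRIEVAL_PER_GB


if __name__ == "__main__":
    # With these prices, 1,000 GB in Standard-IA stops paying off once
    # roughly 1,050 GB per month is read back.
    print(breakeven_retrieved_gb(1000))
```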

You also pay for requests made against your S3 buckets and objects — they typically account for less than 1% of your monthly S3 costs, unless you’re uploading large amounts of data or transitioning objects between storage classes.

Data transfer

You pay for all bandwidth into and out of Amazon S3, except for: data transferred into S3 from the Internet, data transferred in the same AWS region (between buckets or to any AWS service), and data transferred out to Amazon CloudFront.

This means that cross-region data transfer to other AWS services (e.g., EC2, EMR, Redshift, or RDS) and cross-region replication between Amazon S3 buckets incur additional costs. To optimize costs, place Amazon S3 buckets in the same region as the AWS resources that access them.
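The billing rules above can be expressed as a small predicate. This sketch encodes only the three exemptions listed in the previous paragraphs (inbound from the Internet, same-region transfers to a bucket or AWS service, and transfers out to CloudFront); the endpoint labels are hypothetical, and it says nothing about per-GB prices.

```python
from typing import Optional


def is_billable_transfer(source: str, destination: str,
                         source_region: Optional[str] = None,
                         destination_region: Optional[str] = None) -> bool:
    """Return True if a transfer into or out of S3 is charged.

    Endpoints are labeled "internet", "s3", "cloudfront", or "aws_service";
    these labels are hypothetical and exist only for this sketch.
    """
    # Data transferred into S3 from the Internet is free.
    if source == "internet" and destination == "s3":
        return False
    if source == "s3":
        # Data transferred out to Amazon CloudFront is free.
        if destination == "cloudfront":
            return False
        # Same-region transfers to another bucket or AWS service are free.
        same_region = (source_region is not None
                       and source_region == destination_region)
        if destination in ("s3", "aws_service") and same_region:
            return False
    # Everything else, including cross-region traffic, is billed.
    return True


if __name__ == "__main__":
    print(is_billable_transfer("s3", "aws_service", "us-east-1", "us-east-1"))  # False
    print(is_billable_transfer("s3", "aws_service", "us-east-1", "eu-west-1"))  # True
```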

Management & analytics

You pay for the storage management features and analytics that are enabled on your account’s buckets:

- Amazon S3 Inventory: Manage, audit, and report on S3 objects.

- S3 Storage Class Analysis: Analyze storage access patterns to decide when to transition data to the right storage class.

- S3 Storage Lens: Gain organization-wide visibility into object-storage usage and activity.

- S3 Object Tagging: Categorize your storage using tags.

Replication

Amazon S3 replication automatically and asynchronously copies objects across buckets. With replication, you pay Amazon S3 charges for storage in the selected destination storage class, storage of the primary copy, replication PUT requests, and, where applicable, infrequent access storage retrieval.

With cross-region replication, you also pay for inter-region data transfer out from Amazon S3 to each destination region. If you’re using S3 Replication Time Control, you also pay a Replication Time Control Data Transfer charge and S3 Replication Metrics charges.
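Putting the replication charges above together, a monthly estimate is a sum of per-GB and per-request terms. All prices here are hypothetical, one replication PUT request per object is assumed, and the infrequent access retrieval charge and S3 Replication Metrics charges are left out for brevity.

```python
# Hypothetical prices for illustration only (USD).
HYPOTHETICAL_PRICES = {
    "source_storage_per_gb": 0.023,
    "destination_storage_per_gb": 0.0125,
    "put_per_1000": 0.005,
    "inter_region_transfer_per_gb": 0.02,
    "rtc_transfer_per_gb": 0.015,
}


def monthly_replication_cost(replicated_gb: float, objects_replicated: int,
                             prices: dict, cross_region: bool,
                             replication_time_control: bool = False) -> float:
    # Storage for the primary copy in the source bucket.
    cost = replicated_gb * prices["source_storage_per_gb"]
    # Storage for the replica in the destination storage class.
    cost += replicated_gb * prices["destination_storage_per_gb"]
    # Assume one replication PUT request per replicated object.
    cost += objects_replicated / 1000 * prices["put_per_1000"]
    if cross_region:
        # Inter-region data transfer out to the destination region.
        cost += replicated_gb * prices["inter_region_transfer_per_gb"]
    if replication_time_control:
        # S3 RTC data transfer charge (Replication Metrics not modeled).
        cost += replicated_gb * prices["rtc_transfer_per_gb"]
    return cost


if __name__ == "__main__":
    print(monthly_replication_cost(100, 10_000, HYPOTHETICAL_PRICES,
                                   cross_region=True,
                                   replication_time_control=True))
```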

S3 Object Lambda

When you use S3 Object Lambda, your Amazon S3 GET, HEAD, and LIST requests invoke an AWS Lambda function that you define. The function processes your data and returns the processed object to your application.

You’re charged for the following:

- Per GB-second for the duration of your AWS Lambda function

- Per 1M AWS Lambda requests

- Amazon S3 requests, priced by request type (prices vary by storage class)

- Per-GB for the data S3 Object Lambda returns to your application

Amazon S3 request and Lambda prices depend on the AWS Region; Lambda charges also depend on the function’s duration and allocated memory.
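The four Object Lambda charges listed above can be combined into one estimate. The sketch assumes every request is a GET against S3 Standard and uses hypothetical prices; the parameter and dictionary key names are illustrative.

```python
# Hypothetical prices for illustration only; real prices vary by Region.
HYPOTHETICAL_PRICES = {
    "lambda_per_gb_second": 0.0000166667,
    "lambda_per_1m_requests": 0.20,
    "s3_get_per_1000": 0.0004,
    "object_lambda_returned_per_gb": 0.005,
}


def object_lambda_monthly_cost(requests: int, avg_duration_s: float,
                               memory_gb: float, returned_gb: float,
                               prices: dict) -> float:
    """Sum the four S3 Object Lambda charges for GET traffic."""
    # Lambda duration: GB-seconds = invocations x seconds x allocated memory (GB).
    gb_seconds = requests * avg_duration_s * memory_gb
    duration_cost = gb_seconds * prices["lambda_per_gb_second"]
    # Lambda requests, billed per 1M invocations.
    lambda_request_cost = requests / 1_000_000 * prices["lambda_per_1m_requests"]
    # S3 GET requests, billed per 1,000 (other request types cost more or less).
    s3_request_cost = requests / 1000 * prices["s3_get_per_1000"]
    # Data that S3 Object Lambda returns to the application, billed per GB.
    returned_cost = returned_gb * prices["object_lambda_returned_per_gb"]
    return duration_cost + lambda_request_cost + s3_request_cost + returned_cost


if __name__ == "__main__":
    # 1M GETs, 100 ms average duration, 512 MB of memory, 100 GB returned.
    print(object_lambda_monthly_cost(1_000_000, 0.1, 0.5, 100, HYPOTHETICAL_PRICES))
```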

Bucket and file configuration

How you configure your buckets and files impacts Amazon S3 costs as well:

- Data compression. Storing data in a compressed format (e.g., gzip, bzip, bzip2, zip, or 7z) or a columnar format such as Parquet can reduce storage costs further.

- File size. When the workload is well understood, file size also affects request costs. Read-intensive workloads can benefit from smaller files that minimize GET operation costs, while write-intensive workloads can benefit from larger files that minimize PUT operation costs.

- Data partitioning. SQL queries are often used for reading S3 data. Data in S3 buckets should be partitioned where possible to minimize GET operation cost and improve query performance.

- S3 versioning. Amazon S3 rates apply to every version of an object stored and transferred when versioning is enabled in a bucket. Every version of an object is the whole object, not the delta from the previous version.

- S3 multipart upload. Using multipart upload, you can upload a single object in several parts. If your upload was interrupted before the object was fully uploaded, you’ll be charged for the uploaded object parts until you delete them.
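Several of the configuration levers above can be sketched with a few small helpers: measuring how much gzip shrinks a sample payload, counting the PUT requests needed to write a dataset at a given file size, building a Hive-style partitioned key (the `key=value` layout that query engines such as Amazon Athena can use to skip irrelevant prefixes), and a lifecycle rule that expires noncurrent versions and aborts stale multipart uploads. Table names, prefixes, and day thresholds are hypothetical, and the PUT count assumes one single-part upload per file (with multipart upload, each part is its own request).

```python
import gzip
import math
from datetime import date


def gzip_size_ratio(data: bytes) -> float:
    """Compressed size as a fraction of the original; billed storage scales with it."""
    return len(gzip.compress(data)) / len(data)


def put_requests_needed(total_bytes: int, file_size_bytes: int) -> int:
    """Single-PUT uploads needed to write total_bytes as files of file_size_bytes."""
    return math.ceil(total_bytes / file_size_bytes)


def partitioned_key(table: str, day: date, filename: str) -> str:
    """Hive-style key, so a query for one day only needs that day's prefix."""
    return f"{table}/year={day:%Y}/month={day:%m}/day={day:%d}/{filename}"


def cleanup_lifecycle_rule(noncurrent_days: int = 30, abort_after_days: int = 7) -> dict:
    """Lifecycle rule that expires old object versions and stale multipart uploads."""
    return {
        "ID": "cleanup-versions-and-uploads",  # hypothetical rule name
        "Filter": {"Prefix": ""},  # empty prefix: applies to every object
        "Status": "Enabled",
        # Each noncurrent version is a full copy, so delete it after N days.
        "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
        # Remove the parts of uploads that were never completed.
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": abort_after_days},
    }


if __name__ == "__main__":
    sample = "\n".join(f"2024-01-01,us-east-1,GET,{i % 100}" for i in range(10_000)).encode()
    print(f"gzip ratio: {gzip_size_ratio(sample):.2f}")

    ten_gib = 10 * 1024**3
    print(put_requests_needed(ten_gib, 1 * 1024**2))    # 10240 PUTs with 1 MiB files
    print(put_requests_needed(ten_gib, 128 * 1024**2))  # 80 PUTs with 128 MiB files

    print(partitioned_key("events", date(2024, 3, 7), "part-0000.parquet"))
    print({"Rules": [cleanup_lifecycle_rule()]})
```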

Optimize S3 costs with Anodot

Optimizing Amazon S3 costs without affecting application performance or adding overhead to operations is challenging.

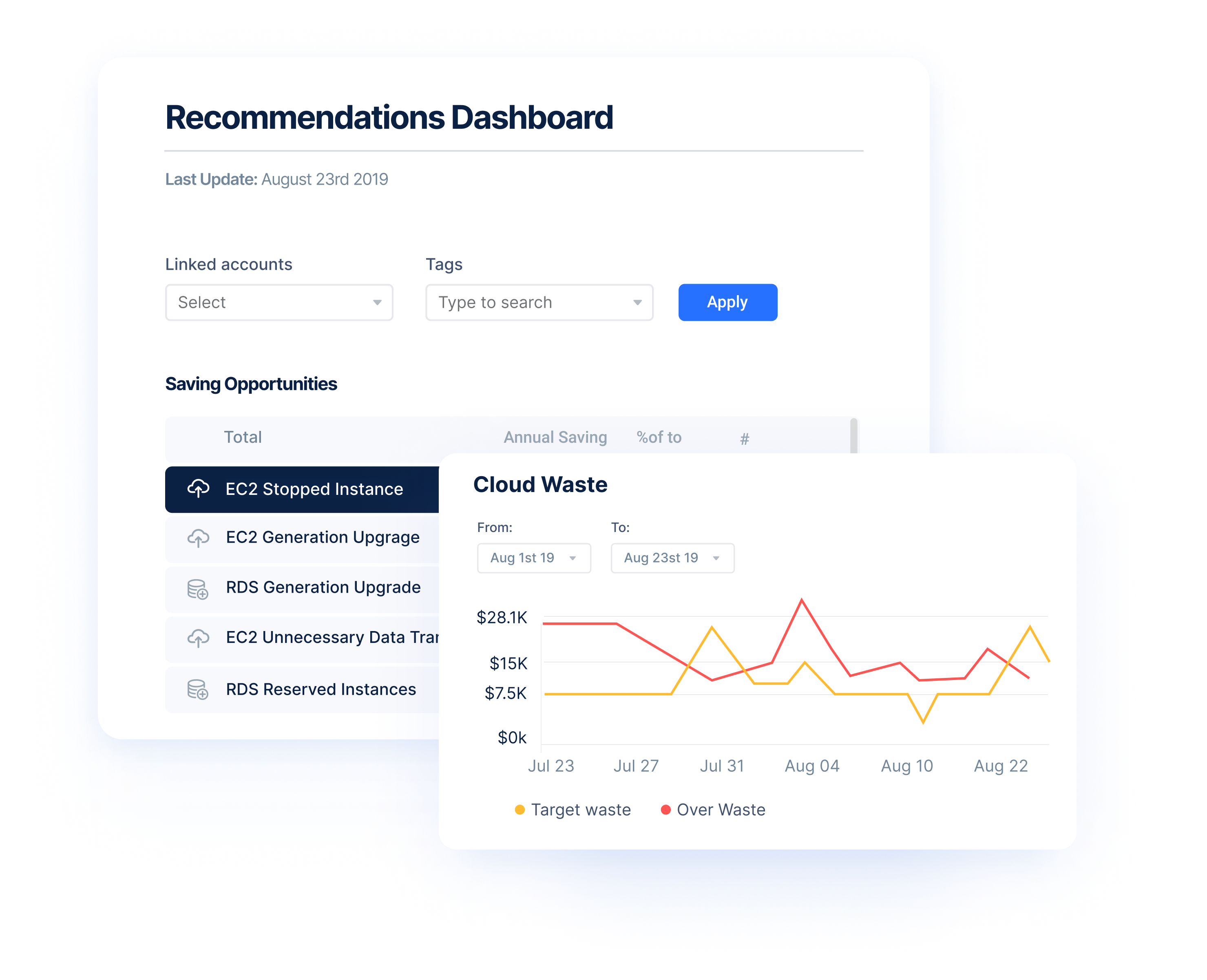

Anodot’s Cloud Cost Management solution makes optimization easy. It connects to AWS, Azure, and GCP to monitor and manage your spending, and integrates all cloud spending, even in multi-cloud environments, into a single platform for a holistic approach to optimization.

Additionally, Anodot offers specific recommendations for Amazon S3 that can be implemented easily.

Amazon S3 storage class optimization:

Anodot makes storage class recommendations in real time, based on actual usage patterns and data needs. Instead of teams manually monitoring S3 buckets, Anodot provides detailed, staged plans for each object based on seasonal patterns.

Amazon S3 management:

- S3 data transfer

- S3 versioning

- S3 multipart upload

- S3 inactive

Anodot’s personalized insights and savings recommendations are continuously updated to reflect the newest releases from major cloud providers, including AWS. Anodot helps FinOps teams prioritize recommendations by showing each one’s projected performance and savings impact.

Anodot is unique in how it learns each service’s usage pattern, taking seasonality into account to establish a baseline for expected behavior. Real-time anomaly detection enables Anodot to identify irregular cloud spend and usage and send contextual alerts to the relevant teams so they can resolve issues as soon as possible.
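Anodot’s own models are not described here, so the following is only a generic illustration of a seasonal baseline, not Anodot’s method: today’s spend is compared with the same weekday in earlier weeks and flagged when it falls more than a few standard deviations away. The seven-day season, the threshold of 3, and the sample figures are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(daily_costs: list[float], threshold: float = 3.0, season: int = 7) -> bool:
    """Flag the last value if it deviates from the same point in earlier cycles.

    The baseline is every earlier value at the same position in a
    `season`-day cycle (the same weekday when season=7).
    """
    today = daily_costs[-1]
    # Step backwards one full season at a time, starting one season ago.
    history = daily_costs[-1 - season::-season]
    if len(history) < 2:
        return False  # not enough history to build a baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return today != baseline
    return abs(today - baseline) / spread > threshold


if __name__ == "__main__":
    # Three full weeks plus six days; weekends are much cheaper than weekdays.
    costs = [100, 102, 98, 101, 99, 40, 42,
             101, 99, 100, 103, 98, 41, 44,
             99, 101, 97, 100, 102, 39, 43,
             100, 100, 99, 102, 101, 40]
    print(is_anomalous(costs + [43.5]))   # a normal Sunday
    print(is_anomalous(costs + [120.0]))  # a Sunday spike
```

A single overall average would treat every weekend dip as unusual; comparing each day only with the same weekday is what the seasonal baseline adds.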

AI and ML-based algorithms provide deep root cause analysis and clear remediation guidance. Anodot helps customers align FinOps, DevOps, and finance teams’ efforts to optimize cloud spending.

Start optimizing your cloud costs today!

Connect with one of our cloud cost management specialists to learn how Anodot can help your organization control costs, optimize resources and reduce cloud waste.